To Iterate or Not to Iterate? (Part 2)

$$x + e^x = 0$$

Suppose that $x$ is a complex number in general, with the form $A + Bi$ (where $i = \sqrt{-1}$).

Find the solution $x$ of the above equation with the minimum value of $\sqrt{A^2 + B^2}$, subject to the constraint $B \neq 0$.

Enter the corresponding value of $\sqrt{A^2 + B^2}$ to three decimal places.

The answer is 4.636.
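As a quick numerical sanity check, the rounded solution $x \approx 1.534 - 4.375i$ given in the solutions can be substituted back into the equation using Python's standard `cmath` module. The residual is small but not exactly zero, because the inputs are rounded to three decimals.

```python
import cmath

# Rounded solution x = A + B*i taken from the solutions on this page
x = complex(1.534, -4.375)

residual = x + cmath.exp(x)  # close to 0 if x is (approximately) a root
magnitude = abs(x)           # sqrt(A^2 + B^2)

print(f"|x + e^x|       = {abs(residual):.4f}")
print(f"sqrt(A^2 + B^2) = {magnitude:.3f}")
```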


2 solutions

Nice. I didn't know about this before. Thanks.

I did it your way, but when I tried another approach I got a different (wrong) answer. Can you point out where it goes wrong?

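$$
\begin{aligned}
x + e^x &= 0\\
\frac{d}{dx}\left(x + e^x\right) &= \frac{d}{dx}\,0\\
\frac{d}{dx}x + \frac{d}{dx}e^x &= 0\\
1 + e^x &= 0\\
e^x + 1 &= 0\\
e^x + 1 &= e^{i\pi} + 1\\
e^x &= e^{i\pi}\\
x &= i\pi\\
A + iB &= 0 + i\pi\\
A = 0,\quad B &= \pi\\
|x| = \sqrt{A^2 + B^2} &= \sqrt{0 + \pi^2} = \pi \approx 3.1415
\end{aligned}
$$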


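The derivation is wrong. $f(x) = 0$ implies $\frac{d}{dx} f(x) = 0$ only if the first equation is satisfied for all values of $x$, that is, if $f$ is identically zero. Here $x + e^x = 0$ holds only at isolated points, so differentiating both sides is not valid.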
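A quick numerical check of this point with Python's standard `cmath` module: $x = i\pi$ satisfies the differentiated equation $1 + e^x = 0$, but substituting it into the original equation leaves a residual of $-1 + i\pi$, which is far from zero.

```python
import cmath
import math

# Candidate produced by the derivative-based derivation: x = i*pi
x = complex(0.0, math.pi)

# Original equation f(x) = x + e^x; since e^{i*pi} = -1 this is about -1 + i*pi
f_value = x + cmath.exp(x)
# Differentiated equation f'(x) = 1 + e^x; zero up to floating-point rounding
df_value = 1 + cmath.exp(x)

print("f(i*pi)  =", f_value)
print("f'(i*pi) =", df_value)
```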

A simple example :

$f(x) = x^2 + 3x + 2 = 0$ and $\frac{d}{dx} f(x) = 2x + 3 = 0$.

Solving the first equation gives $x = -1, -2$, while the second gives $x = -3/2$.

In fact, the second equation locates the stationary points (minimum, maximum, or inflection) of the graph of $f(x)$; here $x = -3/2$ is the minimum of the parabola, not a root.
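The same example can be checked with NumPy's polynomial helpers (`np.roots`, `np.polyder`, `np.polyval`). The root of the derivative, $x = -3/2$, is where $f$ reaches its minimum value of $-1/4$, and it is not a root of $f$ itself.

```python
import numpy as np

# f(x) = x^2 + 3x + 2, coefficients in descending powers of x
f = np.array([1.0, 3.0, 2.0])
df = np.polyder(f)                  # f'(x) = 2x + 3

roots_f = np.sort(np.roots(f))      # solutions of f(x) = 0
roots_df = np.roots(df)             # solution of f'(x) = 0 (the vertex)
f_at_vertex = np.polyval(f, roots_df[0])

print("roots of f      :", roots_f)
print("root of f'      :", roots_df)
print("f at root of f' :", f_at_vertex)
```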

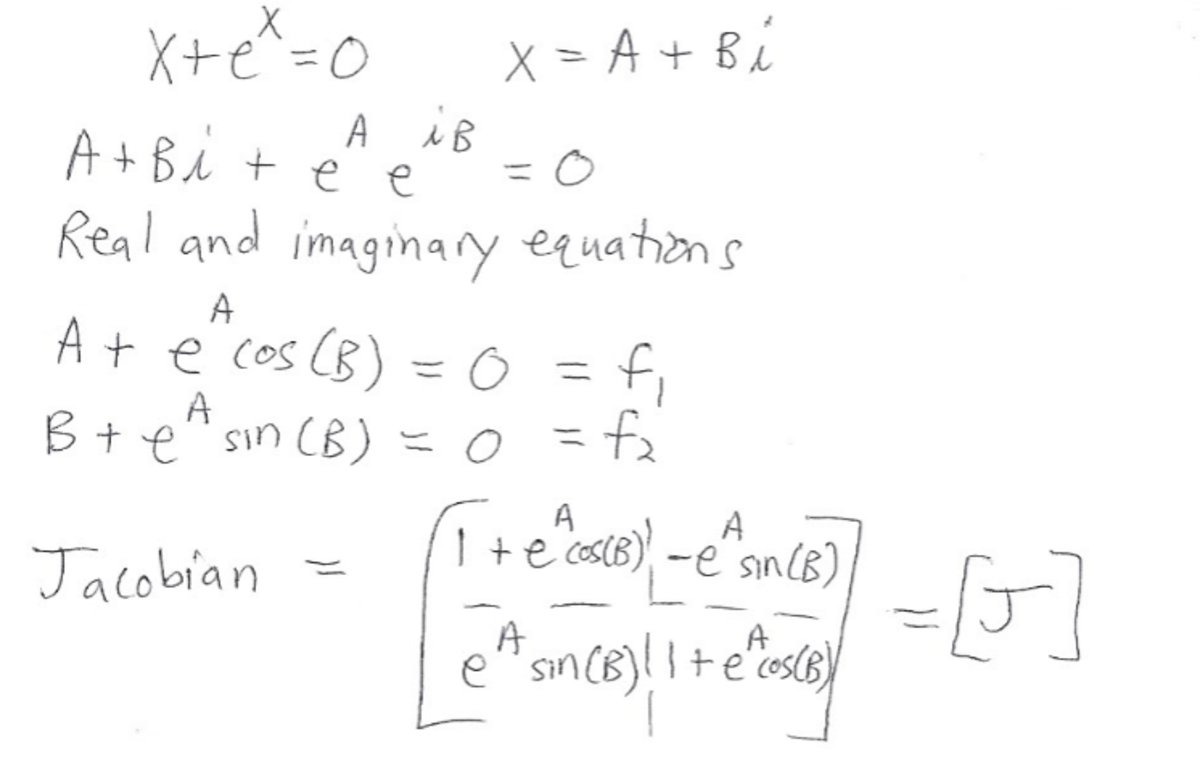

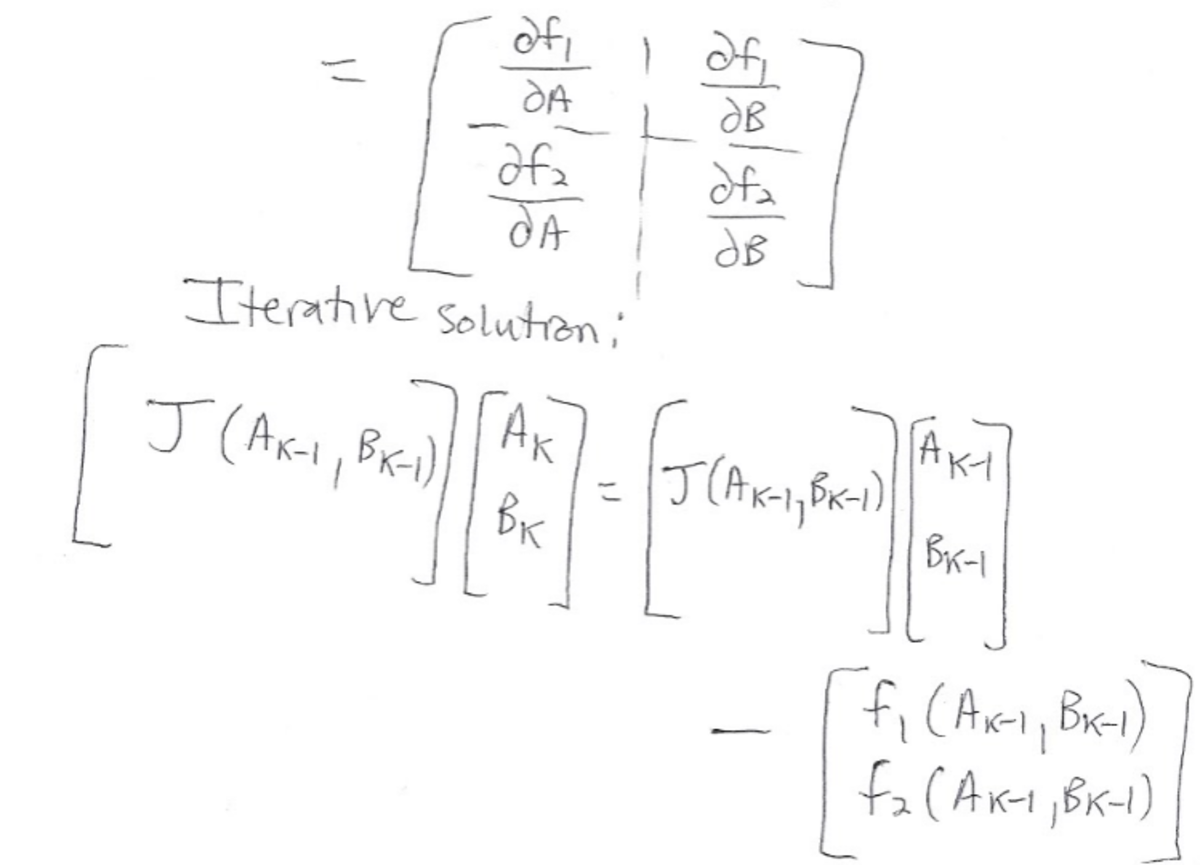

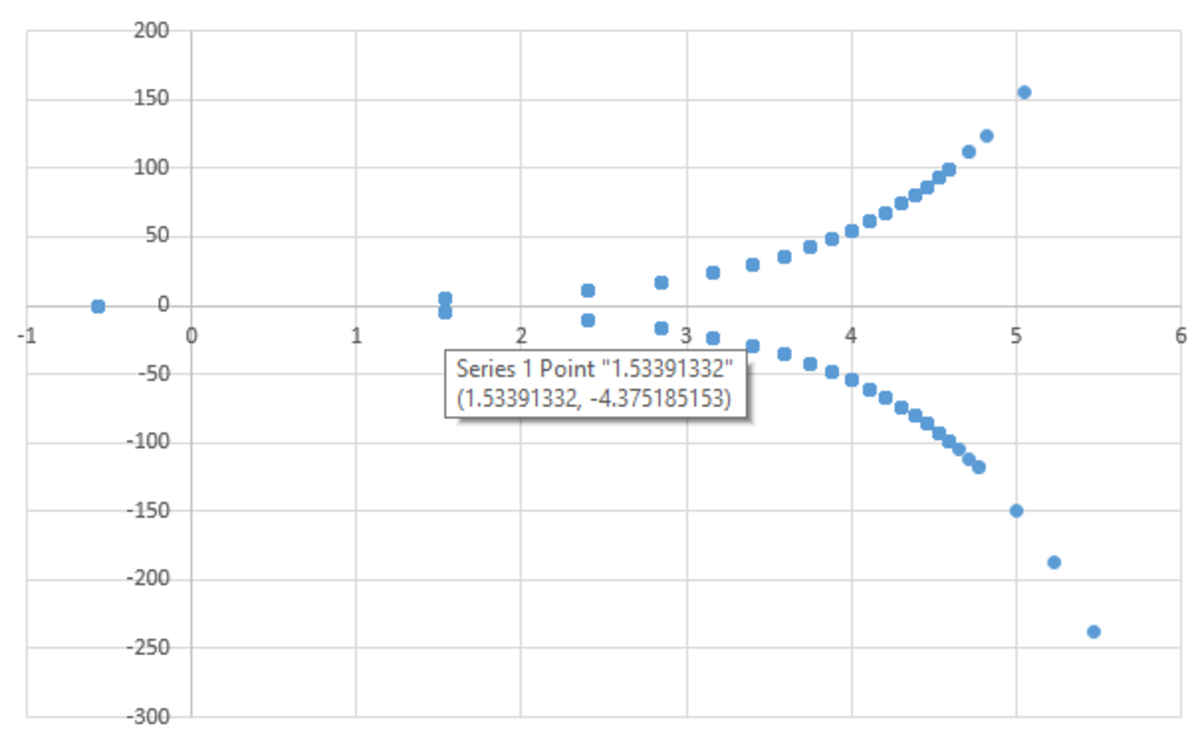

A good way to solve this is the multivariate Newton-Raphson algorithm. Instead of taking a single derivative in one variable (see the Newton-Raphson page), we use a matrix of partial derivatives, known as the Jacobian matrix. The analysis is shown in the images, along with a plot of some solutions in the complex plane. There is one real-valued solution, plus infinitely many complex-valued solutions that come in complex-conjugate pairs. The complex solutions with the smallest value of $\sqrt{A^2 + B^2}$ are $1.534 \pm 4.375i$, each with a magnitude of about 4.636. The solutions were generated by running the Newton-Raphson algorithm many times from different random $(A, B)$ starting pairs; the particular starting point determines which solution the algorithm converges to. I have attached Python code for reference (it can be wrapped in a big loop and run many times to generate a plot). As a side note, hill-climbing algorithms and evolutionary algorithms are also good for solving these kinds of problems.
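For reference, here is the real $2 \times 2$ system this approach solves, read off from the `f1`, `f2`, and `J` expressions in the code (the images may present it differently). Writing $e^{x} = e^{A}(\cos B + i\sin B)$ and splitting $x + e^x = 0$ into real and imaginary parts gives:

$$
\begin{aligned}
f_1(A, B) &= A + e^{A}\cos B = 0,\\
f_2(A, B) &= B + e^{A}\sin B = 0,
\end{aligned}
\qquad
J = \begin{pmatrix} 1 + e^{A}\cos B & -e^{A}\sin B \\ e^{A}\sin B & 1 + e^{A}\cos B \end{pmatrix}
$$

$$
\begin{pmatrix} A_{k+1} \\ B_{k+1} \end{pmatrix}
= \begin{pmatrix} A_{k} \\ B_{k} \end{pmatrix}
- J^{-1} \begin{pmatrix} f_1(A_k, B_k) \\ f_2(A_k, B_k) \end{pmatrix}
$$

The code performs this update without forming $J^{-1}$ explicitly: it solves $J\,\mathbf{v}_{k+1} = J\,\mathbf{v}_k - \mathbf{f}$ for $\mathbf{v}_{k+1} = (A_{k+1}, B_{k+1})$.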

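```python
import math
import random

import numpy as np

# Initial guesses: random (A, B) in [-100, 100) x [-100, 100)
A = -100.0 + 200.0 * random.random()
B = -100.0 + 200.0 * random.random()

# Iterative solution (multivariate Newton-Raphson)
try:
    for j in range(100):
        # Real and imaginary parts of x + e^x, with x = A + B*i
        f1 = A + math.exp(A) * math.cos(B)
        f2 = B + math.exp(A) * math.sin(B)
        fvec = np.array([f1, f2])

        # Jacobian of (f1, f2) with respect to (A, B)
        J11 = 1.0 + math.exp(A) * math.cos(B)
        J12 = -math.exp(A) * math.sin(B)
        J21 = math.exp(A) * math.sin(B)
        J22 = 1.0 + math.exp(A) * math.cos(B)
        J = np.array([[J11, J12], [J21, J22]])

        # Newton step: solve J * new = J * old - f, i.e. new = old - J^-1 f
        vec = np.array([A, B])
        right = np.dot(J, vec) - fvec
        Sol = np.linalg.solve(J, right)
        A = Sol[0]
        B = Sol[1]

    # Residual at the final iterate
    f1 = A + math.exp(A) * math.cos(B)
    f2 = B + math.exp(A) * math.sin(B)
    res = math.fabs(f1) + math.fabs(f2)

    # Report only starting points that converged to a root
    if res <= 10.0**-4.0:
        print(A, B)
except (OverflowError, np.linalg.LinAlgError):
    pass  # this start diverged (e^A overflowed) or hit a singular Jacobian
```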
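A minimal sketch of the "big loop" described above, under assumptions of my own: 2000 random starts in the same $[-100, 100]$ box, a fixed seed, and a $10^{-6}$ tolerance for treating two roots as the same. Instead of the explicit $2 \times 2$ Jacobian, it runs Newton's method directly in complex arithmetic, $x \leftarrow x - (x + e^x)/(1 + e^x)$. Because $x + e^x$ is analytic, the Cauchy-Riemann equations make this the same step as the Jacobian version. The helper name `newton` is hypothetical.

```python
import cmath
import random


def newton(x, iters=200, tol=1e-12):
    """Newton's method on g(x) = x + e^x in complex arithmetic.

    Returns the converged root, or None if this start diverged.
    """
    for _ in range(iters):
        try:
            ex = cmath.exp(x)
            step = (x + ex) / (1 + ex)  # g(x) / g'(x)
        except (OverflowError, ZeroDivisionError):
            return None                 # e^x overflowed or g'(x) was exactly 0
        x -= step
        if abs(step) < tol:
            break
    try:
        converged = abs(x + cmath.exp(x)) <= 1e-8
    except OverflowError:
        return None
    return x if converged else None


random.seed(1)  # fixed seed so the run is repeatable
roots = []
for _ in range(2000):
    start = complex(-100.0 + 200.0 * random.random(),
                    -100.0 + 200.0 * random.random())
    r = newton(start)
    # Keep each distinct root once
    if r is not None and all(abs(r - s) > 1e-6 for s in roots):
        roots.append(r)

# Apply the B != 0 constraint, then take the smallest magnitude
nonreal = [r for r in roots if abs(r.imag) > 1e-6]
best = min(nonreal, key=abs)
print(f"{len(roots)} distinct roots found")
print(f"smallest-magnitude non-real root: {best:.3f}, |x| = {abs(best):.3f}")
```

Starts with a large positive $A$ move only about one unit per iteration, so some of them run out of iterations and are simply discarded; plotting `roots` in the complex plane gives a picture like the one described above.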

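Use the Lambert W function.

$$x + e^x = 0 \implies e^x = -x \implies 1 = (-x)\,e^{-x}$$

The right-hand side has the form $w e^{w}$ with $w = -x$, so $w = W_n(1)$ for some branch $n$ of the Lambert W function. Therefore,

$$x = -W_n(1), \quad n \in \mathbb{Z}$$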

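The minimum-magnitude non-real solution is $x = -W_1(1) \approx 1.534 - 4.375i$, with $\sqrt{A^2 + B^2} \approx 4.636$ (together with its conjugate $x = -W_{-1}(1) \approx 1.534 + 4.375i$).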
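The branches can be evaluated numerically. SciPy provides `scipy.special.lambertw(z, k)` for this; to keep the sketch dependency-free, the version below instead uses a hypothetical helper, `lambert_w_of_one`, that runs Newton's method on $w e^w = 1$ starting from the usual asymptotic estimate $w \approx 2\pi i k - \log(2\pi i k)$ for $k \neq 0$. It is not a general Lambert W implementation, and landing on branch $k$ is expected (not guaranteed) only for the small $k$ used here.

```python
import cmath


def lambert_w_of_one(k, iters=50):
    """Approximate W_k(1) by Newton's method on h(w) = w*e^w - 1 (sketch only)."""
    if k == 0:
        w = 1.0 + 0j  # start near the real root W_0(1) ~ 0.567
    else:
        t = 2j * cmath.pi * k
        w = t - cmath.log(t)  # asymptotic estimate for branch k (log 1 = 0)
    for _ in range(iters):
        # h(w) / h'(w) = (w e^w - 1) / (e^w (w + 1)) = (w - e^{-w}) / (w + 1)
        step = (w - cmath.exp(-w)) / (w + 1)
        w -= step
        if abs(step) < 1e-14:
            break
    return w


# x = -W_n(1) for a few branches n
solutions = {n: -lambert_w_of_one(n) for n in range(-3, 4)}

for n, x in sorted(solutions.items(), key=lambda item: abs(item[1])):
    residual = abs(x + cmath.exp(x))
    print(f"n = {n:+d}: x = {x:.4f}, |x| = {abs(x):.4f}, |x + e^x| = {residual:.1e}")
```

Sorting by $|x|$ puts the real root $-W_0(1) \approx -0.567$ first (excluded by $B \neq 0$), followed by the conjugate pair $n = \pm 1$ with $|x| \approx 4.636$.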