Stochastic processes sampler pack (I)

Here we will solve a few classic problems in probability using some exotic methods from non-equilibrium statistical mechanics. Though each of these problems can be addressed using more elementary methods, the tools and tricks we'll explore here are powerful, and can be used to great effect in solving more difficult kinds of questions in physics and probability.

What sets stochastic processes apart from the usual questions in probability is the central role of fluctuations. In stochastic settings, it is not enough to reason about the most likely outcome, as it is not necessarily representative of typical states of the system.

For instance, if we're interested in building a temperature control system for a trip to Mars, it isn't enough to know that the average temperature remains 70 deg F, as this could mean anything from an enjoyable, constant 70 deg F to a ship that switches once a day between 0 and 140 deg F, killing all of its passengers.

For stochastic problems, we'd like to know about all the states of the system and thus require the entire probability distribution.

The first problem that we'll talk about is known, in some circles, as Ehrenfest's urn. In this problem, there are two urns, one on the left (), and one on the right (), each of which can hold a total of balls. At each time step, there is some chance per ball of moving from the left urn to the right urn, and some chance of doing the reverse. Part of the inspiration for this model is the second law of thermodynamics which states roughly that any closed dynamical system will, in the long run, tend to states of highest disorder. If a system is currently in a highly ordered state, it should feel a pressure toward disorder upon rearrangements.

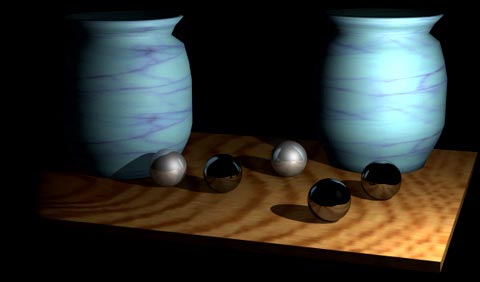

For example, consider a container of liquid after a partition is lifted between pure and dye-containing water:

Here, despite the lack of any identifiable driving force, the ordered (locally concentrated) dye molecules feel an overwhelming pressure to diffuse. An entropic force of sorts drives the system.

The balls in the urn are subject to the same considerations and can therefore bring out the entropic effect, as well as the dynamics of the system as it converges to "thermodynamic" equilbrium. The balls can be replaced with dye, heat, or genes, the effect still holds.

One question we can ask about the urns is what distribution the balls will have, on average, between the two urns. Some harder questions of interest are what dynamics the distribution will have on its way to steady state, and what statistics the steady state distribution of balls will exhibit.

Along the way we'll sketch some powerful results that allow us to make statements about uniqueness.

In the urn problem, we start out with balls in the left urn, and in the right. Each ball in the left urn has a chance per unit time of moving to the right. Likewise, each ball in the right urn has a chance of moving to the left.

After one time step, the number of balls in the left and right urns has changed to

\begin{alignat}{3} N_\text{L}(\Delta t) \quad & = N_\text{L}(0)\left(1-\alpha\right)\Delta t &&+\quad N_\text{R}(0)\beta \Delta t \\ N_\text{R}(\Delta t) \quad & = N_\text{L}(0)\alpha \Delta t &&+\quad N_\text{R}(0)\left(1-\beta\right)\Delta t \end{alignat}

This can be rewritten in matrix form:

For ease of notation, we write the state of the urns at time as the vector . We see that the matrix is the sum of and

Evidently, to move the system through time we multiply the state of the urn by the matrix .

Here, we have a choice to make. We can proceed in continous time (the limit ), or in discrete time steps where is finite. First, let's look at the continuous time case.

For simplicity, let us consider the case where .

Moving to the continous limit, we have .

First, we find :

and in the limit we have , which has the solution .

Let's unpack the exponential term.

If we write , we see that and so we write

This expansion might not seem to help our cause, however, we point out that

as do all even powers of . With that identity, we have

Now,

finally, we find the mean distribution between the urns with time:

As a sanity check, we see that yields the initial condition, as expected.

We can take the long time limit of this expression to find the steady state distribution. We obtain

This shows that the blending process erases all history of the initial distribution between the urns. Moreover, we see that the initial state flows to the steady state at twice the hopping rate .

At this point we might reflect on the road to solution. The matrix algebra is elegant and we obtain a clean solution for the problem. On the other hand, it is something of a lucky coincidence that our matrix can be written in terms of , where . Further, there are more complicated systems than the Ehrenfest urn.

What can we say we have learned about linear stochastic processes in general from working the urn problem using the matrix exponential? The answer is, unfortunately, not much. For instance, do linear stochastic systems always have a steady state or can they exhibit oscillations? Can they be multistable? Can the steady state depend on the intial condition? It would be useful to find a more insightful method of solution. We will make a start of this in the next section.

Before we begin, we note a difference between our work here and in the continuous case. In discrete time, the and are actual probabilities, whereas they were rates in the work above.

We'll pick up at the point where we'd established

We see that this equation implies a recursion . Therefore, we can obtain the dynamics of the average trajectory simply by raising to the power we desire. The trouble is that isn't diagonal, and, so, can result in unwieldy expansions upon raising powers. Luckily, most matrices can be transformed into diagonal representations.

The idea is that contrary to arbitrary matrices, diagonal matrices are easy to manipulate, therefore it would be preferable to work with them if possible. As diagonal matrices only have entries along the diagonal, any power of the matrix is given by raising each entry in the diagonal matrix to the desired power. So, if

then

Further, we can sandwich factors of between each of the without affecting the expression.

.

We hope to find such , and for such that . If we can, then most of the manipulation is carried out with a diagonal matrix, apart from the two factors of buttressing the .

As it turns out, such a is easy to find, and it is given by the matrix whose columns are the eigenvectors of . The diagonal entries of are the eigenvalues of . So long as has a linearly independent set of eigenvectors, a diagonal representation exists.

Let's find the eigenvalues, and eigenvectors of . What we'd like to find are two vectors which are left unchanged by apart from overall multiplication by a constant:

However, for non-zero vectors , has a non-zero solution if and only if the determinant of is zero: . We therefore solve :

From which we see that the two eigenvalues are and .

The eigenvectors corresponding to these values are

as we said, is given by a matrix whose columns are the eigenvectors of , hence

2x2 matrix inversion is simple and we have

then,

Because and (as and are probabilities), we have , and as . Therefore, at very long times, we can approximate as

and

We can see that the final state of the urns is, like before, independent of the initial conditions. The balls are split according to the relative magnitudes of the probabilities to jump from one urn to the other.

If instead we work at all time scales, keeping and we can get obtain dynamics of the system:

Depending on the particular values of the hopping probabilities, the solution can exhibit different qualitative behaviors. The eigenstate produces a constant urn distribution that the system approaches with time. The minor eigenstate, with eigenvalue is more interesting. For , the dynamics of the minor eigenstate is an exponential decay, and the system approaches the steady state monotonically. When however, the minor mode is an oscillation that decays more or less slowly. In some sense this should be obvious, because when , every ball in the system is always switching, though this kind of behavior might not be expected from such a simple system.

Let's reflect on the calculation and ask what lessons we can take from it. Though somewhat less elegant than the matrix algebra in the first calculation, things seem somehow more general. Our calculation didn't depend on clever tricks with and series manipulation. Almost all matrices are diagonalizable, and so, there is reason to hope that this approach will be more widely applicable.

Another key is that the large eigenvalue was 1, and the small eigenvalue had magnitude less than 1. This ensures that all valid initial conditions attract to .

It is reasonable to ask whether or not this is a coincidence. Can we always expect on the largest eigenvalue to be 1? Can we always expect the other eigenvalues to satisfy ?

Let's look at the form of

One thing that stands out is the sum of the columns: . This represents the conservation of probability. Every ball must up somewhere in the system, and, so, the columns must sum to one. This will always be true in a linear stochastic process, regardless of the details of the system. Does this imply anything about the eigenvalues?

Square matrices can have left , and right eigenvectors . However, for any square matrix, the set of eigenvalues for the left and right eigenvectors are the same. Therefore, let's try the vector with our transformation :

which shows that the eigenvalue of is 1. Note that this will be true for all matrices that describe linear stochastic processes. It is the conservation of probability that implies will be an eigenvalue for the system, and not any detailed considerations.

Note, however, that the right eigenvalue and the left eigenvalue for do not have equal entries. The right eigenstate of the system, that corresponds to , depends on the details of the transport between states (i.e. the rates between the urns).

Can we take this any further? Can we summarize the remaining eigenvalues for an arbitrary linear stochastic process? If all remaining eigenvalues have magnitude less than 1, we can be certain about of the uniqueness of steady state solutions.

To begin, no eigenvalue of the system can be greater than 1, if it were, we'd have implying that each entry in is greater than the corresponding entry in . However, every entry in the former is just a weighted average of the entries in the latter. It is then impossible for the maximum entry in to exceed the maximum entry in . Therefore, no eigenvalue of has magnitude greater than one.

While it is a bit harder to prove, it is true in general that there is only one eigenvector corresponding to the maximum eigenvalue (Perron-Frobenius theorem).

This is powerful. Any linear stochastic process, no matter how complicated, will tend toward a single steady state. In every linear stochastic process, any initial condition of a linear stochastic process will flow toward the same steady state. The blending will cleanse the system state of all remnants of non-steady state modes.

However, this result is also very boring. For instance, there is no chaos. There can be oscillations in the decay to equilibrium (i.e. some ), but all states will eventually converge on the steady state. What is required to have sustained oscillations in non-deterministic systems? Are such oscillations possible without an external driving force? What is required for bistability? Is it possible in closed systems? We'll address these questions in future notes.

Next time we'll again consider the urn, asking what are the statistics of the steady state, and how do fluctuations scale with large urn systems (many balls).

Easy Math Editor

This discussion board is a place to discuss our Daily Challenges and the math and science related to those challenges. Explanations are more than just a solution — they should explain the steps and thinking strategies that you used to obtain the solution. Comments should further the discussion of math and science.

When posting on Brilliant:

*italics*or_italics_**bold**or__bold__paragraph 1

paragraph 2

[example link](https://brilliant.org)> This is a quote# I indented these lines # 4 spaces, and now they show # up as a code block. print "hello world"\(...\)or\[...\]to ensure proper formatting.2 \times 32^{34}a_{i-1}\frac{2}{3}\sqrt{2}\sum_{i=1}^3\sin \theta\boxed{123}Comments

Very wll written. Keep it up! :)

nice piece of work...keep it up..