The Importance of Learning New Technology (Professional Programming Series Part 1)

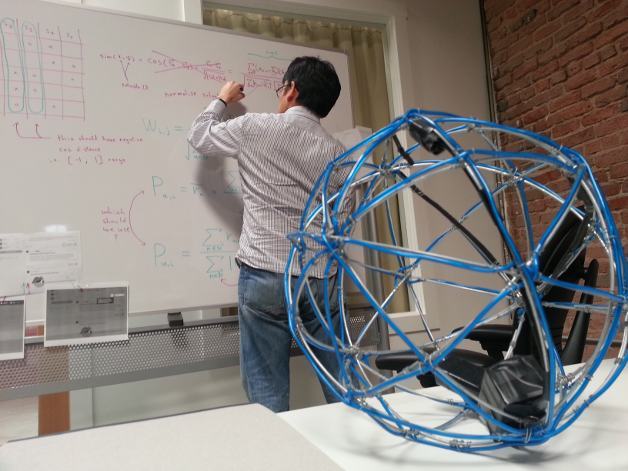

This is the first main post of the Brilliant Professional Programming Series. It was written by Kenji Ejima, who does programming and infrastructure development at Brilliant (he is the one at the whiteboard in the picture above).

Learning new technology is important to many professionals, but this is especially true for computer programmers. However, we don't actually talk about the specifics of learning new technologies on the job that often. Some of the topics that probably deserve more thought and discussion are:

- how much effort engineers need to put into staying relevant,

- how to prioritize new technologies, and

- concerns about the momentum created by becoming a master of a specific technology.

I’ll try to address each of these topics below.

Technology Life Cycle

Think about a list of technologies that you have used in the past, but don’t use much any more. For example, these are some technologies that I no longer use, but used heavily for some period in the past:

- UNIX (Solaris, SunOS, OSF/1, HP-UX)

- Win32 API

- Borland C++ Builder

- MontaVista Linux

- subversion

- Adobe Flex

- Apache HTTP Server

Most of these technologies are still around, and none of them are dead or anything. However, some of them may be past their prime — the industry is slowly moving away from them. Even if your list is short now, as you become more experienced your list will almost certainly grow.

If you’re straight out of college, most of the technologies that you know and use are still relevant so you probably don’t think about this that much. You might just think “Well, if I don’t need to know the old stuff, and I just learned all the new stuff then I’m all set”, but it’s important to keep in mind: much of the technology you learned will go away at some point — probably soon.

Let’s see some examples.

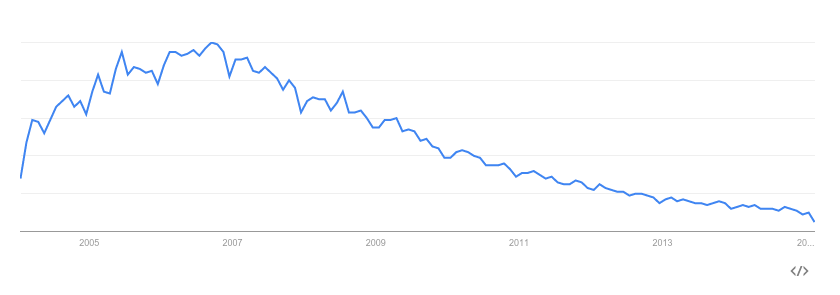

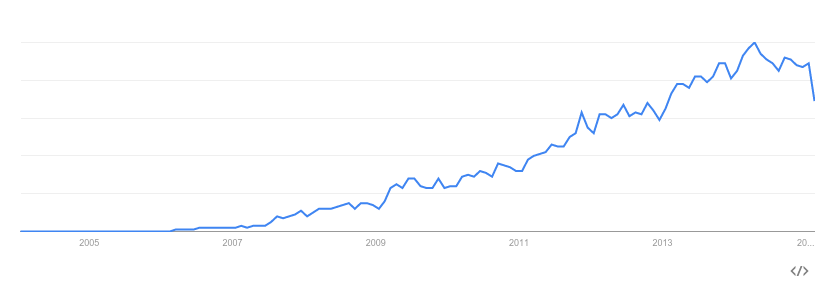

Google trend for “subversion”

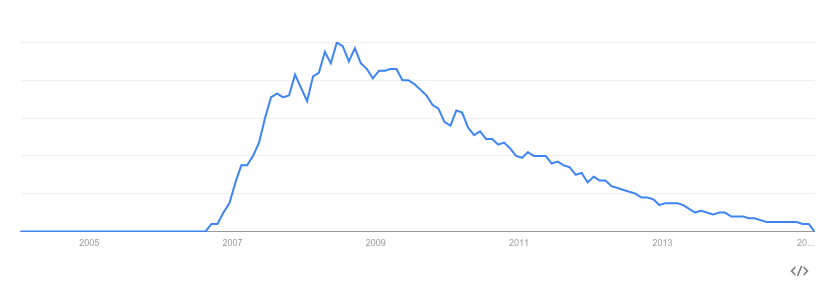

Google trend for “mootools”

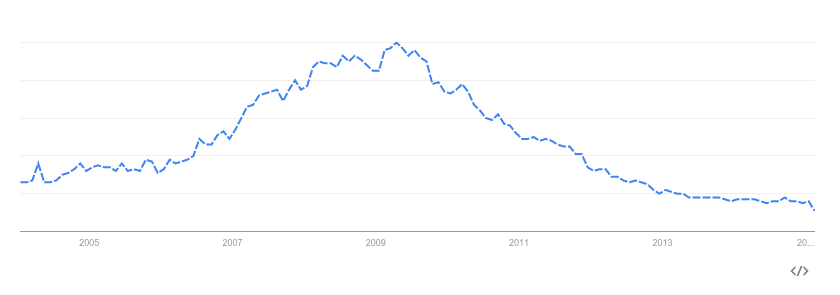

Google trend for “Adobe Flex”

Google trend for “Hadoop”

Google trend for “docker”

In general, I think it’s reasonable to assume that most technologies have a 7-year life cycle. I’m not measuring the life cycle from the first release day to the day the technology gets discontinued, but rather the period during which it has a reasonable amount of online information, popularity, and support. You may arrive at a different number, like 10 years or 5 years, depending on your specialty, so for the following calculation go with the number that you think is reasonable:

Assuming most technologies have a 7-year life cycle, about one seventh of what we know goes stale each year, so we must replace roughly 15% of our knowledge every year just to keep up. Doesn't that sound like a lot to you? It sounds like a lot of effort to me. If you’re learning less than that, you may want to consider whether you should make a new investment in yourself by learning a new technology.
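The arithmetic behind that estimate can be sketched in a few lines of Python. This is only an illustration: the function name `yearly_refresh_fraction` is made up for this example, and it assumes that knowledge goes stale evenly over the life cycle, which is a simplification.

```python
def yearly_refresh_fraction(life_cycle_years: float) -> float:
    """Fraction of our knowledge to replace each year.

    Assumes (for illustration only) that what we know expires
    evenly over the technology life cycle.
    """
    if life_cycle_years <= 0:
        raise ValueError("life cycle must be positive")
    return 1.0 / life_cycle_years


# Try the life cycles mentioned in the text: 5, 7, and 10 years.
for years in (5, 7, 10):
    fraction = yearly_refresh_fraction(years)
    print(f"{years}-year life cycle: {fraction:.0%} per year")
```

With a 7-year life cycle this comes out to roughly 14%, which is the "about 15%" used in this post. A 5-year life cycle raises it to 20%, and a 10-year life cycle lowers it to 10%.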

Technology Evaluation

Learning 15% worth of our knowledge every year is a pretty large investment to make, and we don’t want to make any bad decisions on what to learn. Evaluating the technology before jumping in is therefore an important step.

First, let’s go over the easy stuff. There are technologies that will most likely stay around for a long time. For example:

- UNIX command line

- SQL or RDBMS (relational database management system)

- Some programming languages

- Most programming concepts

The UNIX command line will probably remain very relevant for professional programmers for 20 to 30 years at least. The commands are not fancy, but each one is designed and implemented to do one thing very well. You can think of GUI tools as fancy cooking machines like bread makers and ice cream makers, whereas UNIX commands are the basic cooking tools like knives, frying pans, measuring cups, and so on. Just as professional chefs don’t frequently rely on those fancy machines over the basic cooking tools, programmers shouldn’t rely on fancy GUI tools over basic UNIX commands. I believe it is a good idea for us to master the UNIX command line.

SQL or RDBMS will stay around for a long time, too. The query language or interface may change, but the concepts behind relational databases, such as data normalization, analysis, and management, will probably stay around forever.

Programming languages that become popular (C, Java, JavaScript, PHP, Python, etc.) have a relatively long life cycle, but programming concepts have even longer life cycles. For example, object-oriented programming and software architecture patterns such as MVC (model-view-controller), layers, or pipes and filters are concepts that will probably still be relevant for our whole careers.

I think it is a good idea to prioritize these “low risk moderate return” technologies that will stay around for a long time.

After learning those, we will have to consider things that have a higher possibility of going away sooner, and we therefore need to evaluate them carefully before jumping on. Some points that I consider when evaluating technologies are:

- trend … is it going up or down (see the charts above from Google Trends)

- competitor … if there is competition, then we will want to pick the more relevant option

- best practices … is the technology old enough to have best practices established

- relevance at work … is it something that will be professionally useful within a year

- lock-in … is it something that will be hard to move away from later, or is it a more open technology

Examples of competitors are PostgreSQL vs. MySQL, KDE vs. GNOME, and Android vs. iOS. You don’t need to think about “which is better” because there probably isn’t a clear winner and loser. It’s more important to think about which will match your style and taste, or the style and taste of the companies you’d like to work for (for instance, if you like Python/Django, PostgreSQL would be a good choice because the Django community seems to prefer PostgreSQL over MySQL).

Each technology will have its own best practices, and if you jump on before they are established, it is likely that you’ll have to discard some of your knowledge and effort.

It is not possible, and probably not a good idea either, to completely avoid being locked in to certain technologies, but it is possible to control how much you are locked in. For example, if you are using a cloud service that offers a lot of proprietary features, you should consider how hard it would be to replicate those features on another service or implement them from scratch should you need to switch to a different service later.
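One common way to keep that kind of lock-in under control is to put a thin interface of your own between your application and the provider. The sketch below is a hypothetical illustration, not something taken from a real service: `BlobStore`, `InMemoryBlobStore`, and `save_report` are names invented for this example, and a real implementation would wrap the provider's own client library.

```python
from typing import Dict, Protocol


class BlobStore(Protocol):
    """Hypothetical minimal storage interface the application depends on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Stand-in implementation; a real one would wrap a provider's client."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def save_report(store: BlobStore, name: str, text: str) -> None:
    # Application code only sees BlobStore, never a vendor-specific client.
    store.put(f"reports/{name}", text.encode("utf-8"))


store = InMemoryBlobStore()
save_report(store, "q1.txt", "hello")
print(store.get("reports/q1.txt"))
```

If you later switch services, only the class that implements `BlobStore` has to change. The more proprietary features leak past that interface, the harder the switch becomes, which is a rough way to gauge how locked in you are.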

Management of “Inefficiency”

Most of the "cost" of learning new technologies comes in the form of a hit to your productivity while you are starting to work with the new technology. I think of this in terms of efficiency. The task for the professional engineer, then, is management of this period of inefficiency when you are learning a new technology.

Let’s say you have 1 or 2 years of experience in C/C++. Is switching to Python or Haskell a big deal? Probably not.

What if you have 5 to 7 years of experience? Probably yes. You have pretty much all of the important standard libraries memorized. You know the idioms. You know all the related developer tools. You can immediately tell if a variable should go on the stack, in static memory, or on the heap. You have a weird function in your brain that tells you which variables haven’t been freed yet.

As you gain more and more experience in a certain technology, it becomes a bigger issue to switch to another technology. You’re not as experienced in the new technology, so your efficiency goes down. You don’t yet know all the standard libraries, and you don’t know all the idioms or related tools yet. So, you will feel: “I can get my work done much faster if I continue to use C++ instead of switching to this new language”.

We therefore tend to try to stay on the technology that we are efficient in. I won’t immediately say that’s a bad idea. But if we aren’t careful, one day we’re going to realize: “Hey, wait. Nobody is talking about COBOL any more…” (or Pascal or punch cards...)

Of course efficiency is important. We have to get stuff done. But we also have to understand that due to the evolving nature of the tech industry, we need to accept the inefficiency that comes with learning new technologies and manage it.

It’s probably not a good idea to say “Okay, I’m going to learn something new, so I’m not going to get much done for a whole month.” We should spread it out and manage the transition or learning curve, or assign time when we’re not extremely busy.

Trying to figure out which new technologies to invest in, and figuring out how to stay productive while learning them, will become more important as we grow as engineers. We have to solve both problems or accept that we will become obsolete long before we are ready to retire.

Comments

Wow! That's an amazing note. Definitely, inspires me to learn some new stuff and makes me realize, with what to proceed further and what to drop.

And I have a question - like you stated under "Management of Inefficiency" that it's rather a big deal to switch over if you already have enough experience with a particular language, so is it efficient, safe (and possible) to start learning all together, I mean learning multiple languages at the same time?

For example - as I told earlier that I look forward to learn Back-End development after my high school graduation, so is it possible to learn Back-End and at same time practice new and upcoming Front-End technologies.

Nevertheless, I learnt quite a few things from this note. Especially, that UNIX Command Line sounds cool. I hope I soon get my hands over it. :)

Thanks.

Hi Kishlaya,

That is a good question.

Generally, it is a good idea to focus on fewer technologies at a time, ideally just one, especially in the early stages of learning.

In practical situations, we cannot provide professional quality output until we have a sufficient amount of knowledge or understanding of the technology. We want to get out of that completely unproductive stage as soon as possible. Once we are out of that stage, we will be inefficient but at least able to provide some output. Being inefficient but providing output is much better than not providing any. Learning multiple technologies at the same time will make us stay longer in the unproductive stage, and therefore, it is not ideal.

Learning new technologies has many sides. It is fun and exciting, but at the same time it is difficult, or at least has some difficult sides. When we learn multiple technologies at the same time, there will be times when one is fun and the other isn't. What often happens is that we proceed with the fun one but stop learning the other. Once we stop for a certain amount of time at a "not fun or difficult" point, we don't come back to it. We want to avoid that situation by running through those points as fast as possible, so it's a good idea to focus on one technology at a time so that we don't get distracted.

Hi Agnishom,

I think I was writing formulae and stuff on the whiteboard to design the algorithm for the recommendation engine, which calculates the "try related problems" part that you see under a problem.

"Trolling Kenji" is what you see in Slack (our chat system) sometimes, and I think Silas Hundt added that startup message, but I have no idea why it was added.

Wait, I think I got it. He added it to troll you!

Haha, you should ask him.

Nice post, by the way.

Nice note sir !!!

If I can ask this, can you tell me what is the "Friday Happy Hour"? I'm sure that I had read it somewhere.

Calvin Edit: I have removed irrelevant comments from this thread, so that we can keep this on topic.

Hi!

In the United States it's not uncommon for co-workers to hang out after work on Fridays for a bit of socializing. This period of time after work is traditionally called "happy hour" (even though it's often longer than an hour, and we also hope people are happy to work at Brilliant). Brilliant used to have the normal Friday happy hour but actually has shifted to having happy hour on Wednesdays so that more people are available every week (people often have other plans on Fridays).

I see !! Thanks for replying sir :)

Is it possible to get a higher resolution of this image?

Here it is