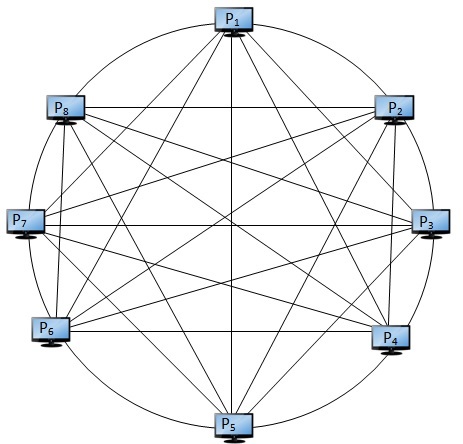

Broadcasting a message in a fully connected network

In a fully connected network with n nodes, the number of direct links between all pairs of computers is 2 n ( n − 1 ) . For n = 2 0 0 0 , find the minimum time required to broadcast a message stored in P 1 to all other computers in the network. Assume that

-

sending a message from a computer to another computer takes 1 0 − 6 sec;

-

any computer can send only one message to any other computer at a time.

Here is an example of fully connected computers with 8 computers.

This section requires Javascript.

You are seeing this because something didn't load right. We suggest you, (a) try

refreshing the page, (b) enabling javascript if it is disabled on your browser and,

finally, (c)

loading the

non-javascript version of this page

. We're sorry about the hassle.

2 solutions

Can you explain why this algorithm works, how you came up with it and why this gives the proposed time?

Log in to reply

This is an Order of l o g 2 ( n ) algorithm. If you trace the given algorithm you will get the following:

step1: P 1 sends message to P 2

step2: P 1 and P 2 send messages to P 3 and P 4

step 3 :

P

1

,

P

2

,

P

3

and

P

4

send messages to

P

5

,

P

6

,

P

7

and

P

8

...

With each step, the number of the computers is doubled or it goes

1 , 2 1 , 2 2 , 2 4 , … , 2 k where k = l o g 2 ( n )

Log in to reply

Thanks, that really helps. I have added that to the solution so that the solution is more clear to the reader

How can we show that this is indeed the optimal algorithm?

Log in to reply

Here we are dealing with a parallel algorithm where we are trying to make use all computers at the same time.

To solve this problem sequentially, it requires O(n) time. To satisfy the optimality when using parallel processing the cost of the algorithm must equal O(n).

Cost of parallel algorithm = runtime × no of computers = l o g 2 ( n ) ∗ n = n l o g 2 n > n

This algorithm is not optimal...

Log in to reply

I do not think we need to multiply with # of computers here. We are only asked to minimize the running time, right?

Log in to reply

@Agnishom Chattopadhyay – Yes, we are asking for minimum time. But the answer to your question about optimality. This is not optimal.

Log in to reply

@Ossama Ismail – What is your definition for optimality?

Log in to reply

@Agnishom Chattopadhyay – When using parallel computers or processor the definition of optimality is:

the best sequential time(one processor) = no. of processor x (parallel run time )

if you satisfy the above equation then your algorithm is optimal. If not then you must achieve speed up.

Here we achieved to speed up, because the run time is o(log(n)) which is better than O(n) in the case of using one processor to send the message to n processors.

We will get minimized time when all computers keep repeating the message to another which is still ignorant of it, once the message reach them. So the number of computers that would have gained the knowledge after each round of 'data-sharing' will double than the number before. Since 2000 is less or equal than 2^11 (more like 2^10 < 2000 <= 2^11), thus it will need 11 rounds of sharing / broadcasting, for a total time of 11x10^-6 s.

We will get minimized time when all computers keep repeating the message to another which is still ignorant of it, once the message reach them

Are you sure we can't do better than this? Why isn't there a better algorithm than this?

We will show that at each iteration, the number of computers in the network who have received the message can be doubled. To do this we do something like this:

step1: P 1 sends message to P 2

step2: P 1 and P 2 send messages to P 3 and P 4

step 3 : P 1 , P 2 , P 3 and P 4 send messages to P 5 , P 6 , P 7 and P 8

...

Because we're doubling the number of computers each time (except for maybe the last step), the number of steps is ⌈ lo g 2 n ⌉ .

So, to send out this message to 2000 computers it takes ⌈ lo g 2 2 0 0 0 ⌉ time units.

Formally, we could do this:

Computer P 1 sends the message to P 2

for i = 0 to (log n – 1) do

for j = 2 i +1 to 2 ( i + 1 ) do in parallel or simultaneously

P ( j − 2 1 ) sends message to P j

end for j

end for i