M Cauchy Présente

The integral $\int_0^\infty e^{\cos x}\sin(\sin x)\,\frac{dx}{x}$ can be expressed as $\frac{1}{P}\pi^{Q}(Re - S)$, where $P$, $Q$, $R$, $S$ are positive integers and $P$, $R$ and $S$ are pairwise coprime.

Write the answer as the concatenation $PQRS$ of the integers $P$, $Q$, $R$, $S$. For example, if you think that the integral is equal to $\frac{1}{3}\pi^{2}(4e - 1)$, give the answer 3241.

The answer is 2111.

5 solutions

Moderator note:

Good clear explanation of how to treat the integral using ideas in complex analysis.

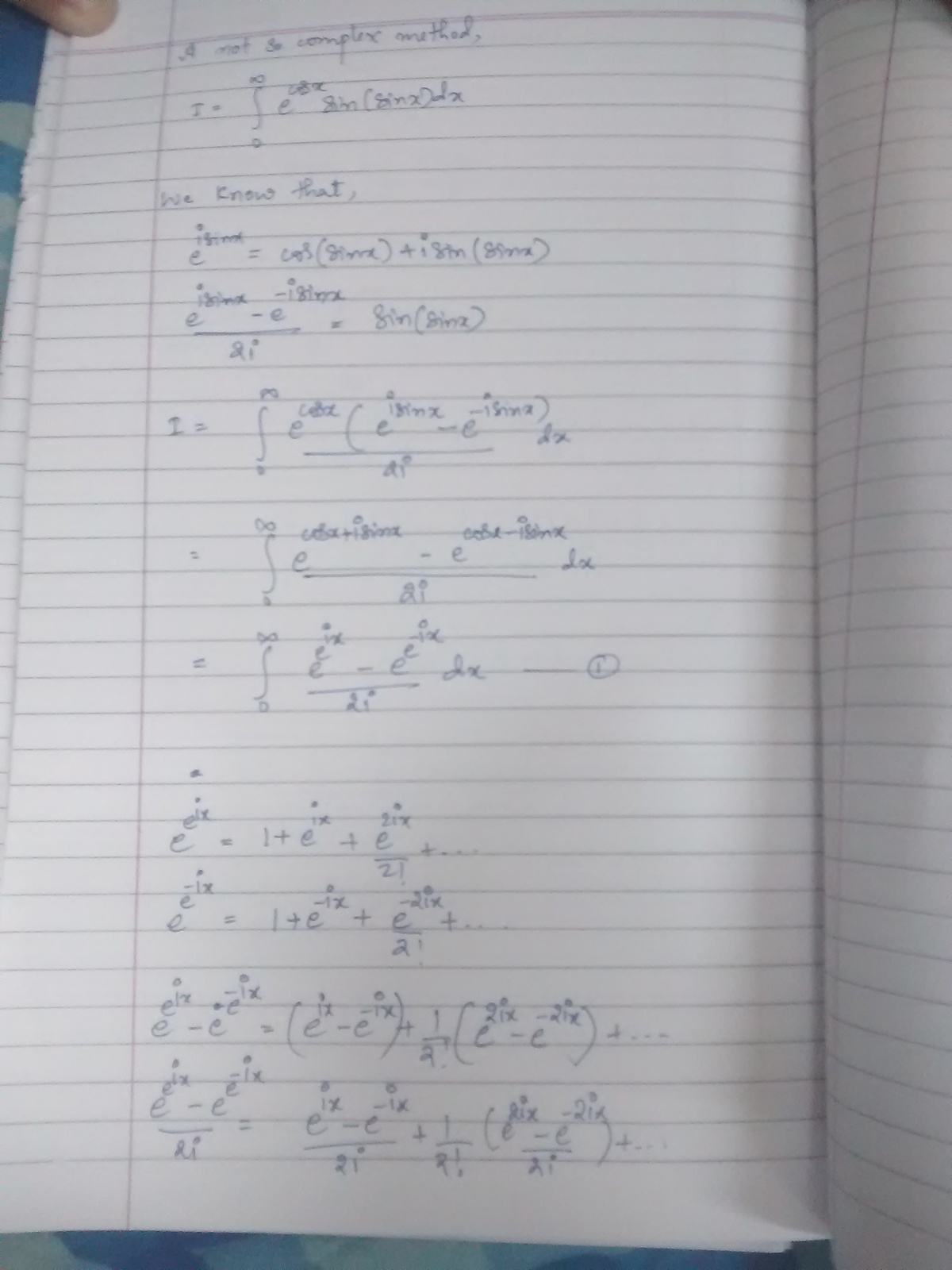

Alternative method [As of now, I still do not know how to solve the issue of convergence. See comments below]

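$$I = \int_0^\infty \frac{e^{\cos x}\sin(\sin x)}{x}\,dx = \int_0^\infty \Im\left[\frac{e^{\cos x}e^{i\sin x}}{x}\right]dx$$

$$= \int_0^\infty \Im\left[\frac{e^{\cos x + i\sin x}}{x}\right]dx = \int_0^\infty \Im\left[\frac{e^{e^{ix}}}{x}\right]dx$$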

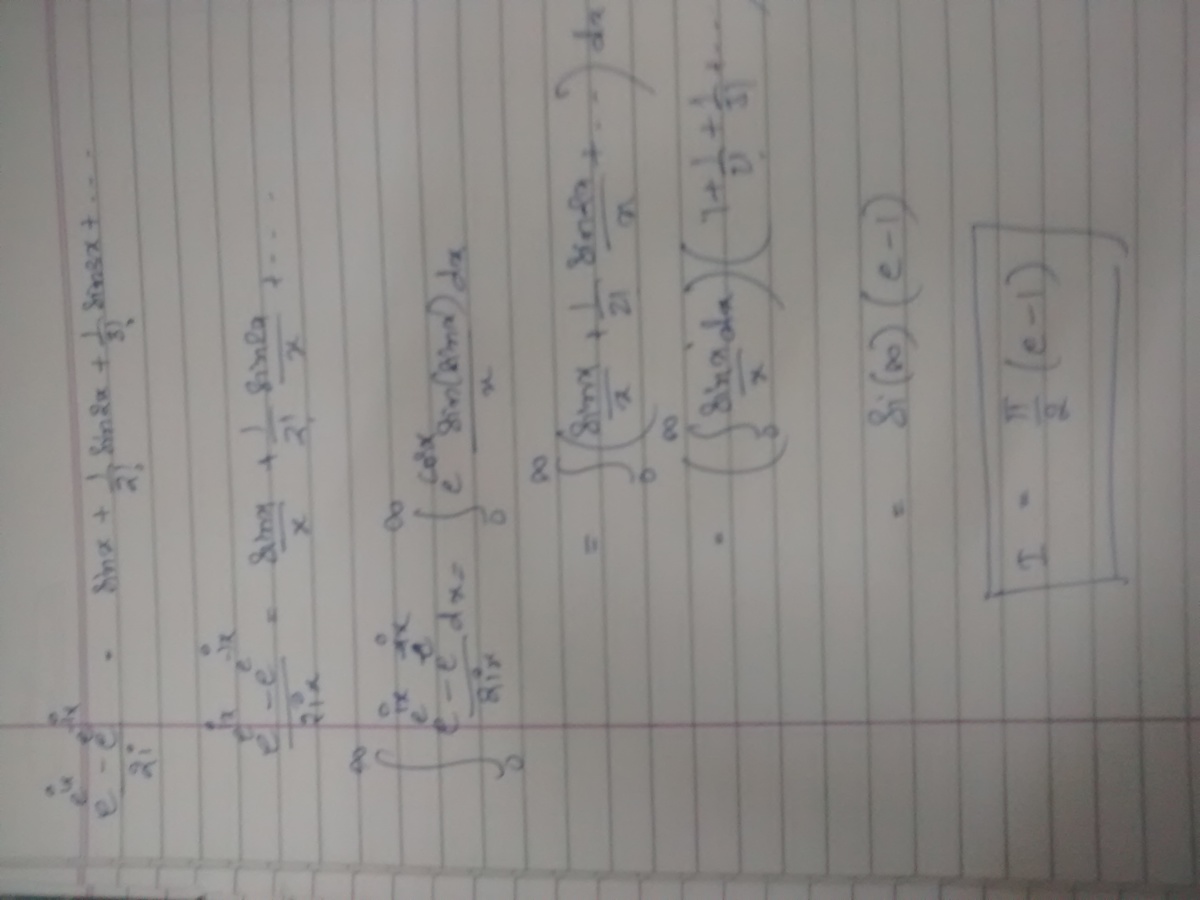

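$$= \int_0^\infty \Im\left[\sum_{n=0}^\infty \frac{1}{n!}\cdot\frac{e^{inx}}{x}\right]dx = \int_0^\infty \sum_{n=0}^\infty \frac{1}{n!}\cdot\frac{\sin(nx)}{x}\,dx$$

$$= \int_0^\infty \sum_{n=1}^\infty \frac{1}{n!}\cdot\frac{\sin(nx)}{x}\,dx$$

Using $\int_0^\infty \frac{\sin nx}{x}\,dx = \frac{\pi}{2}$ for $n > 0$:

$$I = \frac{\pi}{2}\sum_{n=1}^\infty \frac{1}{n!} = \frac{\pi}{2}(e - 1)$$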
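As a sanity check on the value $\frac{\pi}{2}(e-1)$, here is a minimal numerical sketch. It is not part of the original solution and it does nothing to settle the convergence question: it only truncates the integral at a large multiple of $2\pi$ and applies the midpoint rule. The cut-off `periods` and the resolution `steps_per_period` are arbitrary choices.

```python
import math

def integrand(x):
    # e^{cos x} * sin(sin x) / x; the limit as x -> 0 is e, but the
    # midpoint rule below never evaluates at x = 0.
    return math.exp(math.cos(x)) * math.sin(math.sin(x)) / x

def truncated_integral(periods=1000, steps_per_period=100):
    """Midpoint-rule estimate of the integral over [0, 2*pi*periods]."""
    upper = 2.0 * math.pi * periods
    steps = periods * steps_per_period
    h = upper / steps
    return h * sum(integrand((k + 0.5) * h) for k in range(steps))

estimate = truncated_integral()
target = 0.5 * math.pi * (math.e - 1.0)
print(estimate, target)
```

The discarded tail decays only like $1/X$, so the truncated value should agree with the target to about three decimal places, not to machine precision.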

This one is not too hard to resolve. We have
$$e^{\cos x}\,\frac{\sin(\sin x)}{x} = \Im\left(\frac{e^{e^{ix}}}{x}\right) = \sum_{n=1}^\infty \frac{1}{n!}\,\frac{\sin nx}{x}$$
for all $x > 0$. Moreover, since
$$\left|\frac{1}{n!}\,\frac{\sin nx}{x}\right| \le \frac{1}{(n-1)!}, \qquad x > 0,\ n \ge 1,$$
and since $\sum_{n=1}^\infty \frac{1}{(n-1)!} < \infty$, we deduce that the above series converges uniformly on $(0,\infty)$. Thus we can reverse the order of integration and summation for a finite interval, and deduce that
$$\int_0^X e^{\cos x}\,\frac{\sin(\sin x)}{x}\,dx = \sum_{n=1}^\infty \frac{1}{n!}\int_0^X \frac{\sin nx}{x}\,dx = \sum_{n=1}^\infty \frac{1}{n!}\int_0^{nX} \frac{\sin u}{u}\,du$$
for any $X > 0$, so that
$$\left|\tfrac12\pi(e-1) - \int_0^X e^{\cos x}\,\frac{\sin(\sin x)}{x}\,dx\right| \le \sum_{n=1}^\infty \frac{1}{n!}\left|\tfrac12\pi - \int_0^{nX} \frac{\sin u}{u}\,du\right|$$
for any $X > 0$.

Given $\epsilon > 0$, we can find $\Xi > 0$ such that
$$X > \Xi \implies \left|\tfrac12\pi - \int_0^X \frac{\sin u}{u}\,du\right| < \epsilon,$$
and hence, since $nX \ge X > \Xi$ for every $n \ge 1$,
$$X > \Xi \implies \left|\tfrac12\pi(e-1) - \int_0^X e^{\cos x}\,\frac{\sin(\sin x)}{x}\,dx\right| \le \sum_{n=1}^\infty \frac{1}{n!}\,\epsilon < e\epsilon.$$
By what it means to be an infinite Riemann integral, this implies the result.
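The bound in this argument can be illustrated numerically. The sketch below is an illustration only, not part of the proof. It tabulates $\mathrm{Si}(t)=\int_0^t \frac{\sin u}{u}\,du$ with a midpoint rule and evaluates the sum $\sum_{n}\frac{1}{n!}\left|\frac{\pi}{2}-\mathrm{Si}(nX)\right|$ truncated to its first 12 terms, for a few integer values of $X$. The names `STEPS_PER_UNIT`, `si_table` and `error_bound` are hypothetical helpers, and the truncation and grid size are assumptions.

```python
import math

STEPS_PER_UNIT = 100  # midpoint-rule grid resolution (an arbitrary choice)

def si_table(upper):
    """Return [Si(k / STEPS_PER_UNIT) for k = 0 .. upper * STEPS_PER_UNIT]."""
    h = 1.0 / STEPS_PER_UNIT
    table = [0.0]
    acc = 0.0
    for k in range(upper * STEPS_PER_UNIT):
        u = (k + 0.5) * h
        acc += h * math.sin(u) / u
        table.append(acc)
    return table

def error_bound(X, table, terms=12):
    """Sum over n = 1..terms of |pi/2 - Si(n X)| / n!, for integer X."""
    return sum(
        abs(math.pi / 2 - table[n * X * STEPS_PER_UNIT]) / math.factorial(n)
        for n in range(1, terms + 1)
    )

TERMS = 12
X_VALUES = [5, 10, 20, 40]
table = si_table(TERMS * max(X_VALUES))
bounds = {X: error_bound(X, table, TERMS) for X in X_VALUES}
for X, b in bounds.items():
    print(X, b)
```

The printed values shrink roughly like $1/X$ (with some oscillation), which is the behaviour the $\epsilon$ argument relies on.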

There are big convergence issues here. Sure, the integrand is given by a uniformly convergent series, but you still need to justify that the term-by-term integral of that series over the infinite interval ( 0 , ∞ ) converges to the integral of the integrand over that interval. You cannot apply either the Monotone Convergence Theorem or the Dominated Convergence Theorem in this case.

Even I did it in this way (yeah, I know I'm technically wrong).

Could you give an example where interchanging the integral and summation gives different results?

Most of the questions on Brilliant don't have this issue, I guess. (That is partly why I don't bother checking much before interchanging.)

@A Former Brilliant Member – I have shown an example for a sequence, not a series, but these are equivalent. Consider $f_n$, defined on $(0,1]$ by the formula
$$f_n(x) = \frac{n}{\ln(n+1)\,(1+nx)}, \qquad 0 < x \le 1,$$
for any positive integer $n$. Then
$$\int_0^1 f_n(x)\,dx = \left[\frac{\ln(1+nx)}{\ln(n+1)}\right]_0^1 = 1$$
for all $n \ge 1$, and hence
$$\lim_{n\to\infty}\int_0^1 f_n(x)\,dx = 1.$$
However, for any $0 < x \le 1$ we have
$$0 \le f_n(x) \le \frac{1}{x\ln(n+1)}, \qquad n \ge 1,$$
and hence it is clear that
$$\lim_{n\to\infty} f_n(x) = 0, \qquad 0 < x \le 1.$$
Thus the sequence of functions $f_n$ converges everywhere to the function $f(x) = 0$, and
$$\int_0^1 f(x)\,dx = 0.$$
Thus here is a case where:

- all the functions $f_n$ are integrable,

- the limit of the integrals $\int_0^1 f_n(x)\,dx$ as $n \to \infty$ exists,

- the pointwise limit of the sequence of functions $f_n$ exists, and defines an integrable function $f$,

but $\int_0^1 f(x)\,dx \ne \lim_{n\to\infty}\int_0^1 f_n(x)\,dx$.
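A minimal numerical sketch of this counterexample, using only the formula for $f_n$ given in the comment. The midpoint rule and the step count are assumptions made for illustration.

```python
import math

def f(n, x):
    """f_n(x) = n / (ln(n + 1) * (1 + n x)) on 0 < x <= 1."""
    return n / (math.log(n + 1) * (1 + n * x))

def integral_of_f(n, steps=20_000):
    """Midpoint-rule estimate of the integral of f_n over (0, 1]."""
    h = 1.0 / steps
    return h * sum(f(n, (k + 0.5) * h) for k in range(steps))

for n in (1, 10, 100):
    # The integral stays close to 1 while the value at a fixed point shrinks.
    print(n, integral_of_f(n), f(n, 0.5))
```

The pointwise decay is only logarithmic in $n$, so the values at a fixed $x$ fall slowly, but the integrals stay pinned at 1.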

There are many other examples.

Interchanging limits is always a delicate operation. There are theorems which enable you to interchange a limit and an integral, but when the conditions for these theorems do not hold, as here, you have to be careful. What is tricky is that there are cases (as the one of this problem) where changing the order of the limit and the integral results in the correct answer, although that reversal of order is not justified by a theorem. Life, or mathematics, is like that!

@Mark Hennings – Thanks for that example!

Have you come across any problem on Brilliant where interchanging gives a different answer?

I'm still a beginner on this, so forgive me if I have interpreted it wrongly. Is interchanging the sum and integral not justified? If so, would showing that

$$\sum_{n=1}^\infty \int_0^\infty \frac{1}{n!}\cdot\frac{\sin(nx)}{x}\,dx$$

converges solve the issue?

@Julian Poon – It is a nontrivial matter to show that
$$\int_0^\infty \sum_{n=0}^\infty f_n(x)\,dx = \sum_{n=0}^\infty \int_0^\infty f_n(x)\,dx$$
even if all the integrals on the RHS exist. The sum on the RHS may fail to converge, or may converge, but not to the integral on the LHS. Even if the sum of integrals on the RHS exists, the integral on the LHS may not exist.

There are two general theorems that make this result true. The Monotone Convergence Theorem tells us that all is well if all the functions $f_n$ are positive, and the Dominated Convergence Theorem tells us that all is well if we can find an integrable function $g$ such that
$$\left|\sum_{n=0}^N f_n(x)\right| \le g(x) \qquad \text{for all } x, N.$$
Neither of these conditions is satisfied in this case, so trying to handle this integral via an infinite series is problematic.

one more question on contours... ;) it was nice

Let $\int_C f(z)\,dz = \int_C \frac{e^{e^{iz}}}{z}\,dz$, where $C$ is the contour as shown in the figure.

Using Cauchy's theorem:

$$\int_r^R f(x)\,dx + \int_\gamma f(z)\,dz + \int_{-R}^{-r} f(x)\,dx + \int_\Gamma f(z)\,dz = 0$$

Now, consider $\int_\Gamma f(z)\,dz = \int_0^\pi \frac{e^{e^{iRe^{i\theta}}}}{Re^{i\theta}}\,Rie^{i\theta}\,d\theta$

As $R \to \infty$,

$$\int_0^\pi i\,e^{e^{iRe^{i\theta}}}\,d\theta \to i\pi$$

Similarly, as $r \to 0$,

$$\int_\pi^0 i\,e^{e^{ire^{i\theta}}}\,d\theta \to -i\pi e$$
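The small-arc limit can be spelled out in one line. This is a sketch assuming, as the figure suggests, that $\gamma$ is the upper semicircle of radius $r$ traversed clockwise from $\theta=\pi$ to $\theta=0$. On that arc $e^{ire^{i\theta}} \to 1$ uniformly in $\theta$ as $r \to 0$, so the integrand tends uniformly to the constant $ie$:

```latex
\int_\gamma f(z)\,dz
  = \int_\pi^0 i\,e^{e^{i r e^{i\theta}}}\,d\theta
  \;\xrightarrow[r \to 0]{}\;
  \int_\pi^0 i\,e\,d\theta
  = -i\pi e .
```

The large-arc limit is not of this type, because $e^{iRe^{i\theta}}$ does not converge uniformly near $\theta = 0$ and $\theta = \pi$. That limit needs a separate estimate.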

Substituting these back into Cauchy's theorem, we get

$$\int_{-\infty}^{\infty} f(x)\,dx = i\pi(e - 1)$$

or $\int_0^\infty \frac{e^{e^{ix}}}{x}\,dx = i\,\frac{\pi}{2}(e - 1)$

On expanding the left-hand side and comparing imaginary parts, we easily get

$$\int_0^\infty \frac{e^{\cos x}\sin(\sin x)}{x}\,dx = \frac{\pi}{2}(e - 1)$$

Hence, the answer is 2111.

That the limit of the integral on the radius R semicircle converges to i π is not at all obvious - controlling that integral was the largest part of my proof - perhaps some details are necessary...

Okay... let me edit again. I wanted to save time typing :D

But let me say here: I used the modulus to bound the integral and show convergence. I found it very simple, which is why I did not include it while typing. I will add it tonight.

Your integrand is my $f(z) + \frac{1}{z}$. The integral of $\frac{1}{z}$ along the contour gives $\pi i$, and the integral of $f(z)$ along the contour tends to zero, as I proved. The argument is easy, but perhaps not obvious to a general reader, without at least a hint!

A solution using the "Feynman trick":

For all $a > 0$, let us define the real-valued function
$$f(a) = \int_0^\infty e^{-ax}\,e^{\cos x}\,\frac{\sin(\sin x)}{x}\,dx.$$
Then we can write
$$\frac{df}{da} = -\int_0^\infty e^{-ax}\,e^{\cos x}\sin(\sin x)\,dx.$$
Now note that we can write
$$-\frac{d}{da}f(a) = \Im\left(\int_0^\infty e^{-ax + e^{ix}}\,dx\right) = \Im\big(g(a)\big).$$
Furthermore,
$$g(a) = \int_0^\infty \sum_{k\ge 0}\frac{e^{(-a+ik)x}}{k!}\,dx = \sum_{k\ge 0}\frac{1}{k!}\int_0^\infty e^{(-a+ik)x}\,dx.$$
The second step can be justified using Fubini's theorem. Now, note that
$$h(a) := \int_0^\infty e^{(-a+ik)x}\,dx = \frac{1}{a - ik},$$
which follows since the integral converges for $a > 0$. Taking imaginary parts gives $\Im\frac{1}{a-ik} = \frac{k}{k^2+a^2}$, so it follows, after some algebraic manipulation and an application of the Monotone Convergence Theorem, that
$$-\frac{d}{da}f(a) = \sum_{k\ge 0}\frac{1}{k!\,\big((k+1)^2 + a^2\big)} \implies f(a) = -\sum_{k\ge 0}\frac{1}{(k+1)!}\arctan\left(\frac{a}{k+1}\right) + C,$$
where $C$ is the integration constant. Now note that
$$\lim_{a\to\infty} f(a) = 0 = -\frac{\pi}{2}(e-1) + C \implies C = \frac{\pi}{2}(e-1).$$
Finally, note that $f(a)$ is a monotonically decreasing function of $a$ and is bounded above by the desired expression. Thus, using the Bounded Convergence Theorem, we get the evaluation of the expression as $\frac{\pi}{2}(e-1)$, which yields the answer 2111.
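The closed form for $f(a)$ can be compared with direct quadrature at a value such as $a = 1$, where the factor $e^{-ax}$ makes the integral converge quickly. This is only a numerical sketch: the cut-off `upper`, the step count and the number of series terms are assumptions, and it says nothing about the limit $a \to 0^+$.

```python
import math

def f_numeric(a, upper=60.0, steps=60_000):
    """Midpoint-rule estimate of f(a), with the integral truncated at `upper`."""
    h = upper / steps
    total = 0.0
    for k in range(steps):
        x = (k + 0.5) * h
        total += math.exp(-a * x + math.cos(x)) * math.sin(math.sin(x)) / x
    return h * total

def f_series(a, terms=30):
    """C - sum_{k>=0} arctan(a / (k + 1)) / (k + 1)!, with C = (pi/2)(e - 1)."""
    c = 0.5 * math.pi * (math.e - 1.0)
    return c - sum(
        math.atan(a / (k + 1)) / math.factorial(k + 1) for k in range(terms)
    )

for a in (0.5, 1.0, 2.0):
    print(a, f_numeric(a), f_series(a))
```

The two columns should agree closely for each $a$, and `f_series(0.0)` returns the constant $C$ itself.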

I am afraid there are big problems with this. The first that I can see comes from your introduction of the "function(s)" $g(a)$ and $h(a)$, where you write:
$$g(a) = \int_0^\infty \frac{e^{-ax + e^{ikx}}}{x}\,dx, \qquad h(a) = \int_0^\infty \frac{e^{-ax + ikx}}{x}\,dx.$$
None of these integrals exist, since their integrands are all $O(x^{-1})$ near $x = 0$. Given the nonexistence of the constituent integrals, you cannot appeal to Fubini's Theorem.

As a minor point, your definition of $f$ omits a factor of $x^{-1}$.

@Mark Hennings , thanks for pointing out those major defects in the proof, for which I should have been more careful. I have modified the proof a bit hoping to make it error-free. I hope the subtle points are also taken care of. Please let me know if any point still needs to be given special care.

That's much better. This is now a good proof. (+1)

@Mark Hennings – Thanks.

This is a good go. You need to look at my discussion with Julian Poon, who came up with much the same solution, since your argument needs work to get precise.

Maybe the best way of solving this integral is by complex analysis; I chose the elementary method: Let:

$$I = \int_0^\infty e^{\cos x}\sin(\sin x)\,\frac{dx}{x}$$

Recalling the definitions and properties of hyperbolic sine and hyperbolic cosine:

$$\cosh x + \sinh x = e^x \implies \cosh(\cos x) + \sinh(\cos x) = e^{\cos x}$$

Therefore:

$$I = \int_0^\infty \cosh(\cos x)\sin(\sin x)\,\frac{dx}{x} + \int_0^\infty \sinh(\cos x)\sin(\sin x)\,\frac{dx}{x}$$

Now consider (for real numbers $a$ and $b$):

$$\sin(a + ib) = \sin a\cos ib + \cos a\sin ib = \sin(a)\cosh(b) + i\cos(a)\sinh(b)$$

$$\cos(a + ib) = \cos a\cos ib - \sin a\sin ib = \cos(a)\cosh(b) - i\sin(a)\sinh(b)$$

Therefore:

$$\sin(a)\cosh(b) = \Re\sin(a + ib) \quad\text{and}\quad \sin(a)\sinh(b) = -\Im\cos(a + ib)$$
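The two identities above, including the minus sign in front of $\Im\cos(a+ib)$, can be spot-checked with Python's `cmath`. The sketch specialises to $a = \sin x$, $b = \cos x$, for which $a + ib = \sin x + i\cos x = ie^{-ix}$. The helper name `residuals` and the sample points are arbitrary choices.

```python
import cmath
import math

def residuals(x):
    """Return (sin(a)cosh(b) - Re sin(w), sin(a)sinh(b) + Im cos(w))
    for a = sin x, b = cos x, w = a + ib = i e^{-ix}."""
    w = 1j * cmath.exp(-1j * x)
    a, b = math.sin(x), math.cos(x)
    first = math.sin(a) * math.cosh(b) - cmath.sin(w).real
    second = math.sin(a) * math.sinh(b) + cmath.cos(w).imag
    return first, second

samples = [0.1, 0.7, 1.3, 2.9, 4.2, 6.0]
worst = max(max(abs(r) for r in residuals(x)) for x in samples)
print(worst)
```

Both residuals should vanish up to rounding error at every sample point.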

Hence:

$$\sin(\sin x)\cosh(\cos x) = \Re\sin(\sin x + i\cos x) = \Re\sin(ie^{-ix})$$

And,

$$\sin(\sin x)\sinh(\cos x) = -\Im\cos(\sin x + i\cos x) = -\Im\cos(ie^{-ix})$$

Now substitute these in the expression of $I$:

$$I = \Re\int_0^\infty \sin(ie^{-ix})\,\frac{dx}{x} - \Im\int_0^\infty \cos(ie^{-ix})\,\frac{dx}{x}$$

Using the Taylor series expansions of the sine and cosine functions:

$$I = \Re\int_0^\infty \sum_{n=0}^\infty (-1)^n\frac{(ie^{-ix})^{2n+1}}{(2n+1)!}\,\frac{dx}{x} - \Im\int_0^\infty \sum_{m=0}^\infty (-1)^m\frac{(ie^{-ix})^{2m}}{(2m)!}\,\frac{dx}{x}$$

Note that $i^{2m} = (-1)^m$ and $i = e^{i\frac{\pi}{2}}$:

$$I = \Re\int_0^\infty \sum_{n=0}^\infty \frac{e^{i\left(\frac{\pi}{2} - x(2n+1)\right)}}{(2n+1)!}\,\frac{dx}{x} - \Im\int_0^\infty \sum_{m=0}^\infty \frac{e^{-ix(2m)}}{(2m)!}\,\frac{dx}{x}$$

Taking the real and the imaginary parts (note that $-\Im e^{-2imx} = \sin(2mx)$):

$$I = \int_0^\infty \sum_{n=0}^\infty \frac{\sin((2n+1)x)}{(2n+1)!}\,\frac{dx}{x} + \int_0^\infty \sum_{m=0}^\infty \frac{\sin(2mx)}{(2m)!}\,\frac{dx}{x}$$

Now using Fubini's Theorem:

$$I = \sum_{n=0}^\infty \frac{1}{(2n+1)!}\int_0^\infty \frac{\sin((2n+1)x)}{x}\,dx + \sum_{m=0}^\infty \frac{1}{(2m)!}\int_0^\infty \frac{\sin(2mx)}{x}\,dx$$

And use the well-known Dirichlet integral:

$$I = \sum_{n=0}^\infty \frac{1}{(2n+1)!}\left(\frac{\pi}{2}\right) + \sum_{m=1}^\infty \frac{1}{(2m)!}\left(\frac{\pi}{2}\right)$$

Note that we omitted the $m = 0$ term of the second summation, because that term vanishes ($\sin 0 = 0$) before the Dirichlet integral is applied. What remains is just the Taylor series of hyperbolic sine and hyperbolic cosine:

$$I = \frac{\pi}{2}\big(\sinh(1) + \cosh(1) - 1\big) = \frac{1}{2}\pi^{1}(1e - 1)$$

Before you can use Fubini's Theorem to reverse the order of summation and integration, you need to know that the integrand is doubly Lebesgue integrable. It is not. We are talking about an infinite Riemann integral here, and Fubini's Theorem does not apply. Your argument is formally interesting, but needs work to make it precise.

In essence, what you are doing is like what Julian Poon did, and his and my discussion showed how his approach could be made to work.

Oh I didn't see the comments below your solution. But still I don't know the exact condition for which one can interchange the sum and integral. Can you tell or explain a little more?

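For any $0 < \epsilon < 1 < R$, let $\Gamma_{\epsilon,R}$ be the contour $\Gamma_{\epsilon,R} = \gamma_1 + \gamma_2 - \gamma_3 - \gamma_4$, where $\gamma_1$ and $\gamma_3$ run along the real axis from $\epsilon$ to $R$ and from $-\epsilon$ to $-R$ respectively, and $\gamma_2$ and $\gamma_4$ are the upper semicircles $z = Re^{i\theta}$ and $z = \epsilon e^{i\theta}$, $0 \le \theta \le \pi$.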

Since the function $f(z) = \frac{e^{e^{iz}} - 1}{z}$ is analytic on the punctured plane $\mathbb{C}\setminus\{0\}$, we deduce that
$$\int_{\Gamma_{\epsilon,R}} f(z)\,dz = 0$$
for all $0 < \epsilon < 1 < R$. Now
$$\int_{\gamma_1} f(z)\,dz = \int_\epsilon^R \frac{e^{e^{ix}} - 1}{x}\,dx, \qquad \int_{\gamma_3} f(z)\,dz = \int_\epsilon^R \frac{e^{e^{-ix}} - 1}{x}\,dx,$$
and hence
$$\left(\int_{\gamma_1} - \int_{\gamma_3}\right) f(z)\,dz = \int_\epsilon^R \frac{e^{e^{ix}} - e^{e^{-ix}}}{x}\,dx = 2i\int_\epsilon^R e^{\cos x}\sin(\sin x)\,\frac{dx}{x}.$$

Since $f(z)$ has a simple pole at $z = 0$, we deduce that
$$\lim_{\epsilon\to 0}\int_{\gamma_4} f(z)\,dz = \pi i\,\operatorname{Res}_{z=0} f(z) = \pi i(e - 1),$$
and so we deduce, letting $\epsilon \to 0$, that
$$2i\int_0^R e^{\cos x}\sin(\sin x)\,\frac{dx}{x} + \int_{\gamma_2} f(z)\,dz - \pi i(e - 1) = 0.$$

If $z \in \gamma_2$ and $w = e^{iz}$, then $z = Re^{i\theta}$ for $0 \le \theta \le \pi$, and so $|w| = e^{-R\sin\theta} \le 1$. Thus
$$|f(z)| \le \left|\frac{e^{w} - 1}{z}\right| = \frac{1}{R}\left|\sum_{n=1}^\infty \frac{w^n}{n!}\right| \le \frac{1}{R}\sum_{n=1}^\infty \frac{|w|^n}{n!} \le \frac{|w|}{R}\sum_{n=1}^\infty \frac{1}{n!} \le \frac{e}{R}\,e^{-R\sin\theta},$$
so that
$$\left|\int_{\gamma_2} f(z)\,dz\right| \le e\int_0^\pi e^{-R\sin\theta}\,d\theta = 2e\int_0^{\frac12\pi} e^{-R\sin\theta}\,d\theta.$$
Jordan's Inequality tells us that $\sin\theta \ge \frac{2}{\pi}\theta$ for $0 \le \theta \le \frac12\pi$, so it follows that
$$\int_{\gamma_2} f(z)\,dz = O(R^{-1}), \qquad R \to \infty,$$
which implies, letting $R \to \infty$, that
$$\int_0^\infty e^{\cos x}\sin(\sin x)\,\frac{dx}{x} = \tfrac12\pi(e - 1),$$
so that $P = 2$, $Q = 1$, $R = 1$ and $S = 1$, making the answer 2111.
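For readers who want the $O(R^{-1})$ step written out, the estimate follows from Jordan's Inequality by a direct computation. This is a sketch of the omitted arithmetic, using only the bounds already stated:

```latex
\left|\int_{\gamma_2} f(z)\,dz\right|
  \le 2e\int_0^{\pi/2} e^{-R\sin\theta}\,d\theta
  \le 2e\int_0^{\pi/2} e^{-2R\theta/\pi}\,d\theta
  = \frac{\pi e}{R}\left(1 - e^{-R}\right)
  \le \frac{\pi e}{R}.
```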