Methane in $\mathbb{R}^4$?

Consider five nonzero vectors in $\mathbb{R}^4$ such that any two of them enclose the same nonzero angle $\theta$. Find $\cos\theta$, to two significant digits.

Bonus Question: Find the corresponding angle $\theta_n$ between $n+1$ nonzero vectors in $\mathbb{R}^n$ and find $\lim_{n \to \infty} \theta_n$.

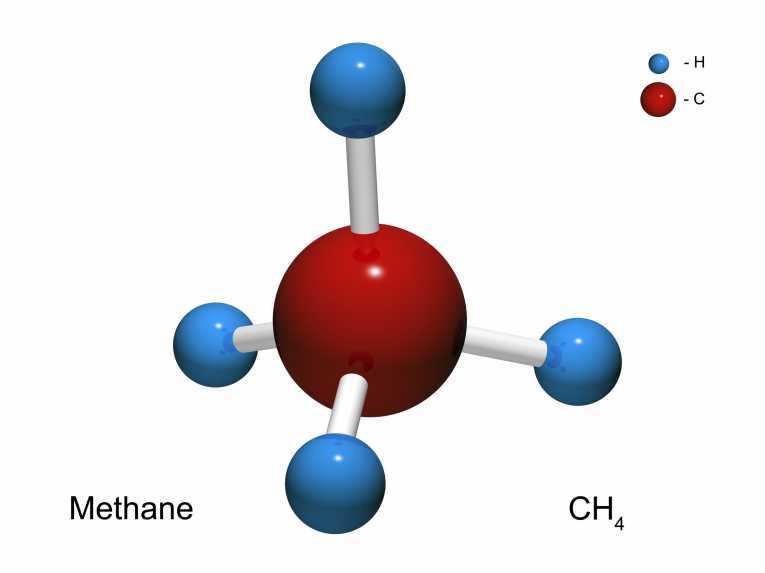

Note that $\theta_3$ is the bond angle in the methane molecule.

Image Credit: C.A.G.E

The answer is -0.25.

5 solutions

Yes, this looks like a solid solution (+1)

Here is the proof of the affine independence of $\{u_i\}$. For $i = 1, 2, \dots, n$, define the vectors $v_i = u_i - u_{n+1}$. Then the $(i,j)$th entry of the Gram matrix $G$ of the vectors $\{v_i\}$ can be calculated to be $2 - 2\cos(\theta_n)$ if $i = j$ and $1 - \cos(\theta_n)$ if $i \neq j$. Thus we can write $G = (1 - \cos(\theta_n))(J + I)$, where $J$ and $I$ are the $n \times n$ all-ones and identity matrices respectively. Excluding the trivial case $\theta_n = 0$, as the problem statement does, we see that $G$ has full rank (in fact, it is positive definite), and consequently the vectors $\{u_i,\ i = 1, 2, \dots, n+1\}$ are affinely independent.

How do you know that G is positive definite?

The matrix G is a sum of a PSD and a PD matrix, and hence it is PD.

@Abhishek Sinha – I suspect that most of our young comrades on Brilliant are not very familiar with these terms; how do you convince them that your J is positive semidefinite?

@Otto Bretscher – Just compute the quadratic form $x^T J x = \left(\sum_i x_i\right)^2$.
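For readers who want the step spelled out, here is a short expansion of that quadratic form, using the same $J$ and $I$ as in the affine-independence proof. It is only a sketch of the standard argument: $J$ is positive semidefinite, and adding $I$ makes the sum positive definite.

```latex
x^T J x = \sum_{i=1}^{n} \sum_{j=1}^{n} x_i x_j = \Big( \sum_{i=1}^{n} x_i \Big)^2 \ge 0,
\qquad
x^T (J + I)\, x = \Big( \sum_{i=1}^{n} x_i \Big)^2 + \sum_{i=1}^{n} x_i^2 > 0
\quad \text{for } x \neq 0 .
```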

My solution is inductive in the number of dimensions n . It is a more general solution than others have given, because it assumes no more than a sequence of nested inner product spaces of increasing dimension.

Suppose that in $n$-dimensional space we have $n+1$ vectors $u_0, \dots, u_n$ such that

$$\begin{cases} u_i^2 = 1 \\ u_i \cdot u_j = -k \end{cases}$$

for all $0 \le i \neq j \le n$ and some value $k = -\cos\theta_n$. (Note: $u_i \cdot u_j$ is the inner product and $u_i^2 = |u_i|^2 = u_i \cdot u_i$ is the corresponding square norm.)

Now we go to $(n+1)$-dimensional space, which contains our original space as a subspace (preserving its inner product); let $z$ be a unit vector in the new space orthogonal to the original space. Define

$$\begin{cases} v_i = \alpha u_i + \beta z, & 0 \le i \le n \\ v_{n+1} = -z \end{cases}$$

Here, $\alpha$ and $\beta$ are parameters to be determined in such a way that the $v_i$ obey the same relations as the $u_i$ (see above), but with a different value for $k$. It is sufficient to require that

$$\begin{cases} v_i^2 = 1 \\ v_i \cdot v_{n+1} = v_i \cdot v_j = -k' \end{cases}$$

for some $0 \le i \neq j \le n$.

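The first condition translates into $\alpha^2 + \beta^2 = 1$; the second condition gives

$$(\alpha u_i + \beta z) \cdot (-z) = (\alpha u_i + \beta z) \cdot (\alpha u_j + \beta z)$$

$$-\beta = -\alpha^2 k + \beta^2.$$

Substituting $\alpha^2 = 1 - \beta^2$,

$$(1 + k)\beta^2 + \beta - k = 0$$

$$\beta = -1 \quad \text{or} \quad \beta = \frac{k}{1+k}.$$

The first solution gives $\alpha = 0$ and would make all vectors $v_i$ equal to $-z$, so that they all make the same angle of $0^\circ$ with each other; this is not the solution we are looking for. Thus we go with the second solution and have

$$k' = \beta = \frac{k}{1+k}; \qquad \alpha = \sqrt{1 - \beta^2} = \frac{\sqrt{1+2k}}{1+k}.$$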

Now we have a way to "upgrade" an $n$-dimensional solution to an $(n+1)$-dimensional solution, and a way to see how $k = -\cos\theta_n$ behaves under this transformation.
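The upgrade step can be tried out numerically. The sketch below is an illustration, not part of the original solution: it starts from the two unit vectors $+1$ and $-1$ on the line ($k = 1$) and repeatedly applies $v_i = \alpha u_i + \beta z$, $v_{n+1} = -z$ with $\beta = k/(1+k)$ and $\alpha = \sqrt{1 - \beta^2}$. The function names `simplex_vectors` and `dot` are made up for this example, and the new axis $z$ is assumed to be the last coordinate.

```python
import math

def simplex_vectors(n):
    """Return n + 1 unit vectors in R^n built by the inductive upgrade step."""
    # Base case n = 1: the vectors +1 and -1 on the line, with k = 1.
    vectors = [[1.0], [-1.0]]
    k = 1.0
    for _ in range(n - 1):
        beta = k / (1.0 + k)
        alpha = math.sqrt(1.0 - beta * beta)
        # v_i = alpha * u_i + beta * z, where z is the new (last) coordinate axis.
        vectors = [[alpha * x for x in u] + [beta] for u in vectors]
        # v_{n+1} = -z
        dim = len(vectors[0])
        vectors.append([0.0] * (dim - 1) + [-1.0])
        k = beta  # the new value k' equals beta
    return vectors

def dot(a, b):
    """Standard dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

vs = simplex_vectors(4)
# Cosines of the angles between all distinct pairs (all vectors are unit length).
cosines = [dot(vs[i], vs[j]) for i in range(len(vs)) for j in range(i)]
print(cosines)
```

For $n = 4$ this produces five vectors in four coordinates, and every pairwise dot product should agree with $-1/4$ up to rounding.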

- For the base case $n = 1$, the only possible nonzero angle between vectors is $180^\circ$, with $k = 1$.

- For $n = 2$ we get $k = 1/2$, corresponding to a $120^\circ$ angle.

- For $n = 3$ we get $k = 1/3$, corresponding to a $109.5^\circ$ angle.

- It is not difficult to show that in general $k = 1/n$: the update $k' = k/(1+k)$ is the same as $1/k' = 1/k + 1$, so $1/k$ grows by 1 with each dimension. As $n \to \infty$, $k \to 0$, so that the angle approaches $90^\circ$ from above.
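The values in this list can be reproduced by iterating the update $k \mapsto k/(1+k)$ exactly. This is a minimal sketch using Python's `fractions` module; the helper name `k_sequence` is made up for the example.

```python
from fractions import Fraction
import math

def k_sequence(n_max):
    """Iterate k -> k / (1 + k), starting from k = 1 at n = 1."""
    k = Fraction(1)
    values = {1: k}
    for n in range(2, n_max + 1):
        k = k / (1 + k)
        values[n] = k
    return values

ks = k_sequence(6)
for n, k in ks.items():
    # The angle satisfies cos(theta_n) = -k.
    angle = math.degrees(math.acos(-float(k)))
    print(f"n = {n}: k = {k}, angle = {angle:.1f} degrees")
```

Exact rational arithmetic makes the pattern $k = 1/n$ visible directly, without rounding noise.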

The solution to the problem, for four dimensions, is $\cos\theta_4 = -k = -\frac{1}{4}$, or $-0.25$.

Why is this solution "more general" than the previous solutions? In other words, what are you generalizing? The previous two solutions hold in any inner product space of the appropriate dimension.

My solution is coordinate-free. In fact, my "$(n+1)$-dimensional space" may be any space big enough to contain both the original $n$-dimensional space and a nontrivial vector that is orthogonal to it.

I now realize that my algebra relies on the fact that $\alpha, \beta$ are real numbers, so that my solution generally works for real inner product spaces.

Of course it can be proven that any finite-dimensional spaces of this kind can be given an orthogonal basis compatible with the sequence of nested subspaces etc. etc. so that one can define a coordinate system; but my problem does not refer to any such coordinate system or basis vectors.

In my solution too, no such explicit basis vectors are invoked. But I don't understand how it "generalizes" the problem, because any $n$-dimensional inner product space over $\mathbb{R}$ is isomorphic to $\mathbb{R}^n$.

@Abhishek Sinha – Sure. I just don't need that (rather profound!) fact :)

@Arjen Vreugdenhil – I think this is a difference in terminology only. My solution is just as valid if I start with "Let $u_1, \dots, u_{n+1}$ be unit vectors in an $n$-dimensional real inner product space", and likewise for Abhishek's solution.

Yes, this is a nice constructive solution! (+1) One can see what is happening: We take an equilateral triangle in the plane, for example, and place it into three-space, making it the base of a regular tetrahedron etc...

Small typos (just to show that I read your solution): $(1 + k)\beta^2 + \beta - k = 0$ and $\beta = -1$.

While I would not call your solution "more general", it does have the advantage of proving the existence of a solution, while the other two solutions do not.

All I can say in defense of my solution is that it is brief and elegant ;)

An elementary proof by finding a recursion on $\theta_n$.

Let $\theta_n$ denote the desired angle associated with the $n+1$ unit vectors $\{x_1, \dots, x_{n+1}\}$ living in $\mathbb{R}^n$, as mentioned in the question. W.l.o.g. we can assume that $x_1 = [1, 0, \dots, 0]$. This forces the other vectors to have the following form:

$$x_{k+1} = \begin{bmatrix} \cos\theta_n \\ x_k^{(n-1)} \end{bmatrix}, \quad k = 1, 2, \dots, n.$$

Consequently, we have a set of $n$ vectors $\{x_1^{(n-1)}, \dots, x_n^{(n-1)}\}$ in $\mathbb{R}^{n-1}$ such that

$$\langle x_i^{(n-1)}, x_j^{(n-1)} \rangle = \cos\theta_n - \cos^2\theta_n, \quad \forall\, i \neq j.$$

However, the vectors $x_i^{(n-1)}$ thus obtained are not normalized. Dividing them by their norm (which is $|\sin\theta_n|$ by construction), we get a new set of $n$ unit vectors with the same angle $\theta_{n-1}$ enclosed between any two of them, where

$$\cos\theta_{n-1} = \frac{\cos\theta_n - \cos^2\theta_n}{1 - \cos^2\theta_n} \implies \cos\theta_{n-1} = \frac{\cos\theta_n}{1 + \cos\theta_n},$$

since $\cos\theta_n \neq 1$ (the angle is nonzero). Rearranging, we get the recursion

$$\sec\theta_n = \sec\theta_{n-1} - 1, \quad n \ge 2.$$

Finally, noting that for $n = 1$ we have $\theta_1 = \pi$ by definition, we get $\cos\theta_n = -1/n$.

Hence, the desired answer is $\cos\theta_4 = -0.25$, and letting $n \to \infty$, $\theta_n \to \pi/2$.
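To make the last step of the recursion explicit, the relation $\sec\theta_n = \sec\theta_{n-1} - 1$ can be unrolled down to the base case $\theta_1 = \pi$. This is only a worked restatement of the argument above:

```latex
\sec\theta_n = \sec\theta_{n-1} - 1 = \sec\theta_{n-2} - 2 = \dots = \sec\theta_1 - (n - 1)
             = -1 - (n - 1) = -n
\quad\Longrightarrow\quad
\cos\theta_n = -\frac{1}{n}, \qquad \cos\theta_4 = -\frac{1}{4} .
```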

Looking at the other solutions here, I'm feeling a little (a lot) overwhelmed. I solved this in a slightly different way (it might be equivalent to @Abhishek Sinha's solution). I'm not sure if the solution is correct, so please tell me if anything's wrong. Let's go.

Let's make the $n+1$ vectors have the same magnitude of 1, with no loss of generality. This doesn't change the angle between any two vectors, whose cosine is still $\cos(\theta_n)$.

I claim that the sum of these vectors must be the zero vector, because the symmetry of the construction means the sum cannot "point" in a specific direction. (If someone can provide a rigorous proof, I'll be happy to include it.)

Okay, so let's take the entire construction and rotate it so that one of the vectors lies along one of the $n$ coordinate axes. Let's name this vector $\hat{i_1}$ because it's neat.

Now, let's name the rest of the vectors too, keeping $\hat{i_1}, \hat{i_2}, \dots, \hat{i_n}$ as the unit vectors of our space, as: ($k$ is not the letter, it's the component of the vector along the $n^{\text{th}}$ dimension.)

$$v_1 = a_1 \hat{i_1} + b_1 \hat{i_2} + \dots + k_1 \hat{i_n}$$

$$v_2 = a_2 \hat{i_1} + b_2 \hat{i_2} + \dots + k_2 \hat{i_n}$$

$$\vdots$$

$$v_n = a_n \hat{i_1} + b_n \hat{i_2} + \dots + k_n \hat{i_n}$$

Note that $\hat{i_j} \cdot \hat{i_k} = 0$ for $j \neq k$, where $\cdot$ is the dot product. This is because they are perpendicular in the $n$-dimensional space. (Rigorous proof encouraged.)

But, by the definition of the dot product, $a \cdot b = |a||b|\cos(\theta)$, where $\theta$ is the angle between $b$ and $a$.

Back to the question now, we have, taking dot products of the vectors with $\hat{i_1}$,

$$\cos(\theta_n) = \frac{\hat{i_1} \cdot v_1}{|\hat{i_1}||v_1|} = \dots = \frac{\hat{i_1} \cdot v_n}{|\hat{i_1}||v_n|}$$

$$|\hat{i_1}| = |v_1| = \dots = |v_n| = 1 \Rightarrow \cos(\theta_n) = a_1 = a_2 = \dots = a_n$$

But $\hat{i_1} + a_1 \hat{i_1} + \dots + a_n \hat{i_1} = (1 + a_1 + \dots + a_n)\hat{i_1} = 0$ from the zero vector condition above (along one dimension).

This means

$$n\cos(\theta_n) + 1 = 0 \Rightarrow \cos(\theta_n) = -\frac{1}{n}.$$

Here, $n = 4 \Rightarrow \cos(\theta_n) = -0.25$.

That's it.
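The zero-sum claim can at least be checked on one explicit configuration, though this is an illustration and not a proof. The sketch below assumes a construction that is not in the solution above: take the $n+1$ standard basis vectors of $\mathbb{R}^{n+1}$, subtract their centroid, and normalize. The results all lie in the $n$-dimensional hyperplane of vectors whose coordinates sum to zero. The names `centered_simplex` and `dot` are made up for the example.

```python
import math

def centered_simplex(n):
    """n + 1 unit vectors obtained by centering the standard basis of R^(n+1)."""
    m = n + 1
    # e_i minus the centroid (1/m, ..., 1/m).
    raw = [[(1.0 if i == j else 0.0) - 1.0 / m for j in range(m)] for i in range(m)]
    # All centered vectors have the same length, so one norm suffices.
    norm = math.sqrt(sum(x * x for x in raw[0]))
    return [[x / norm for x in v] for v in raw]

def dot(a, b):
    """Standard dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

n = 4
vs = centered_simplex(n)
# Coordinate-wise sum of all n + 1 vectors.
total = [sum(column) for column in zip(*vs)]
cosines = [dot(vs[i], vs[j]) for i in range(n + 1) for j in range(i)]
print(total)
print(cosines)
```

In this configuration the vectors sum to zero and every pairwise cosine agrees with $-1/n$ up to rounding, which is consistent with the claim but does not show it for every configuration.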

As you point out yourself, there is a gap in your solution: You assume that the sum of the vectors is $0$. To use Abhishek's terms: You assume that the center of gravity, $g$, is $0$. I don't see a direct way to justify that assumption. Abhishek skillfully works around the issue with his affine argument, while I avoid the issue altogether.

But what's wrong with the symmetry argument? I'm just curious about why it's wrong.

You need to make the argument more precise. For example, if you take three vectors in $\mathbb{R}^3$ such that any two of them enclose the same angle (a right angle, for example), their sum may not be $0$ (but you have "symmetry" too).

@Otto Bretscher – But it's not possible for 4 vectors in $\mathbb{R}^3$, right? I think the jump goes from $n$ to $n+1$ in $\mathbb{R}^n$, which has to do with the linear dependence of the vectors.

@Ameya Daigavane – Yes, exactly, but you cannot prove it by appealing to symmetry alone. With 5 vectors in 4-space, it is clear that they are linearly dependent, but it is not clear that they are dependent in this special way that their sum is 0.

@Otto Bretscher – Got it. But it does seem like there does exist a configuration of $n+1$ vectors in $\mathbb{R}^n$ such that their sum is zero, and the angle between each pair is the same. Could a constructive argument work then?

@Ameya Daigavane – Once we know that $\cos(\theta_n) = -\frac{1}{n}$, it is easy to show that the sum of the unit vectors must be zero, since $(v_0 + v_1 + \dots + v_n) \cdot (v_0 + v_1 + \dots + v_n) = (n+1) + n(n+1)\cos(\theta_n) = 0$.

@Otto Bretscher – Of course, I got that. I was wondering if you could prove that without using the result we have to prove, that is, $\cos\theta_n = -\frac{1}{n}$.

@Ameya Daigavane – Take a look at this one ... it may clarify the issues.

The solution I had in mind is similar to that of Abhishek. I assume that the reader has taken a first course in Linear Algebra. If you have not yet done so, you should! ;)

Let $u_1, \dots, u_{n+1}$ be unit vectors in $\mathbb{R}^n$ with $u_i \cdot u_j = \cos(\theta_n)$ for $i \neq j$. Let $G = (u_i \cdot u_j)_{ij}$ be the associated Gram matrix, which is singular and positive semidefinite, so that the smallest eigenvalue of $G$ is $0$. Note that the diagonal entries of $G$ are $1$, while all other entries are $\cos(\theta_n)$. As we discussed here, the eigenvalues of $G$ will be $1 - \cos(\theta_n) > 0$ and $n\cos(\theta_n) + 1 = 0$, so that $\cos(\theta_n) = -\frac{1}{n}$.
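The two eigenvalues can be checked by hand on explicit eigenvectors. The sketch below is an illustration under the assumption $n = 4$, $\cos\theta_n = -1/n$: the all-ones vector should be mapped to zero (eigenvalue $1 + n\cos\theta_n$), and a difference of two basis vectors should be scaled by $1 - \cos\theta_n$. The helper names `gram` and `matvec` are made up for the example.

```python
def gram(n, c):
    """(n + 1) x (n + 1) matrix with 1 on the diagonal and c elsewhere."""
    size = n + 1
    return [[1.0 if i == j else c for j in range(size)] for i in range(size)]

def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

n = 4
c = -1.0 / n
G = gram(n, c)

# The all-ones vector: every row of G sums to 1 + n*c.
ones = [1.0] * (n + 1)
G_ones = matvec(G, ones)

# A difference of two basis vectors, e_1 - e_2: scaled by 1 - c.
diff = [1.0, -1.0] + [0.0] * (n - 1)
G_diff = matvec(G, diff)

print(G_ones)
print(G_diff)
```

With $c = -1/4$, the first product should be the zero vector and the second should be $1.25$ times `diff`, matching the eigenvalues $0$ and $1 - \cos(\theta_n)$.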

In particular, $\cos(\theta_4) = -0.25$.

FYI: If $\theta_n$ exists, then it is equal to the value calculated. However, (if I recall correctly) such vectors need not exist for all $n$.

@Calvin Lin: I believe they do exist, but I may be wrong. Consider the $(n+1) \times (n+1)$ matrix $A$ with 1's on the diagonal and $-\frac{1}{n}$ elsewhere, a singular positive semidefinite matrix. Now we have a Cholesky decomposition $A = LL^T$, and the rows of $L$ will have the property we seek (since they are linearly dependent, they live in an $n$-dimensional space).
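The Cholesky idea can be sketched in a few lines. The routine below is a hand-written textbook Cholesky factorization in plain Python, not a library call, with one assumption added for the singular case: a pivot that rounds to a slightly negative number is clamped to zero. The name `cholesky_psd` is made up for the example.

```python
import math

def cholesky_psd(a):
    """Lower-triangular L with L L^T = a, for a positive semidefinite matrix a."""
    size = len(a)
    L = [[0.0] * size for _ in range(size)]
    for j in range(size):
        s = a[j][j] - sum(L[j][k] ** 2 for k in range(j))
        # Clamp tiny negative rounding errors; the last pivot is zero in exact arithmetic.
        L[j][j] = math.sqrt(max(s, 0.0))
        for i in range(j + 1, size):
            t = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = t / L[j][j] if L[j][j] > 0.0 else 0.0
    return L

def dot(a, b):
    """Standard dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

n = 4
A = [[1.0 if i == j else -1.0 / n for j in range(n + 1)] for i in range(n + 1)]
L = cholesky_psd(A)
# Pairwise dot products of the rows of L reproduce the off-diagonal entries of A.
cosines = [dot(L[i], L[j]) for i in range(n + 1) for j in range(i)]
last_column = [row[n] for row in L]
print(cosines)
print(last_column)
```

The rows of `L` are unit vectors with pairwise dot product $-1/n$, and the last column is zero up to rounding, so the rows can be read as vectors in $\mathbb{R}^n$.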

The Cholesky decomposition is essentially what @Arjen Vreugdenhil does, from first principles.

Denote $n+1$ unit vectors in $\mathbb{R}^n$ by $\{u_i,\ i = 1, 2, \dots, n+1\}$. Define the center of gravity of these vectors by

$$g = \frac{1}{n+1} \sum_{i=1}^{n+1} u_i.$$

If the common cosine between the vectors $\{u_i\}$ is denoted by $\cos(\theta_n)$, then for all $i = 1, 2, \dots, n+1$ we have

$$u_i \cdot g = \frac{1}{n+1}\left(1 + n\cos(\theta_n)\right).$$

Since the vectors $\{u_i,\ i = 1, 2, \dots, n+1\}$ live in an $n$-dimensional space, they must be linearly dependent. Thus there exist scalars $\lambda_i$, not all zero, such that

$$\sum_{i=1}^{n+1} \lambda_i u_i = 0.$$

Taking the dot product of the above equation with $g$, we conclude that

$$\left(1 + n\cos(\theta_n)\right)\left(\sum_{i=1}^{n+1} \lambda_i\right) = 0,$$

which implies $\cos(\theta_n) = -\frac{1}{n}$ provided $\sum_{i=1}^{n+1} \lambda_i \neq 0$, i.e., the vectors are affinely independent, i.e., they enclose a strictly positive volume in $\mathbb{R}^n$. See my comment below for a proof.
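For completeness, the two dot-product steps used in this argument can be written out term by term. This is only a worked restatement, using the same $u_i$, $g$, and $\lambda_i$ as above:

```latex
u_i \cdot g = \frac{1}{n+1} \Big( u_i \cdot u_i + \sum_{j \neq i} u_i \cdot u_j \Big)
            = \frac{1}{n+1} \big( 1 + n \cos\theta_n \big),
\qquad
0 = \Big( \sum_{i=1}^{n+1} \lambda_i u_i \Big) \cdot g
  = \sum_{i=1}^{n+1} \lambda_i \, (u_i \cdot g)
  = \frac{1 + n \cos\theta_n}{n+1} \sum_{i=1}^{n+1} \lambda_i .
```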

Hence, for $n = 4$, the answer is $-0.25$, and $\lim_{n \to \infty} \theta_n = \frac{\pi}{2}$.